Declarative data orchestration: Dagster & Hamilton

Learn how Dagster and Hamilton are similar and different.

In this post, we’ll compare the open-source Python frameworks Dagster and Hamilton, covering the following points:

What a declarative approach is and how it compares to imperative frameworks like Airflow.

How Dagster is a macro-orchestrator and Hamilton is a micro-orchestrator.

Which do you need?

See the full comparison table at the end of the post!

See the code example on GitHub

Imperative vs. Declarative

Orchestration is the coordination and management of tasks to achieve a desired outcome. In the data world, it is used to interact with multiple systems (e.g., a database, a data warehouse, some compute cluster, or a machine learning platform) to streamline and automate tasks. There are two common approaches to describing what these tasks are and how they relate: imperative and declarative. Let’s explain the difference using Airflow, Hamilton, and Dagster.

Airflow (imperative)

Define

Airflow, the canonical orchestrator, is imperative and requires developers to tell it exactly “what to do”. First, you create tasks with the @task decorator:

```python
from airflow.decorators import task
from airflow.operators.python import get_current_context


@task(params={"external_input": ...})
def A() -> int:
    """Modulo 3 of input value"""
    context = get_current_context()
    external_input = context["params"]["external_input"]
    return external_input % 3


@task
def B(A: int) -> float:
    """Divide A by 3"""
    return A / 3


@task
def C(A: int, B: float) -> float:
    """Square A and multiply by B"""
    return A ** 2 * B
```

Assemble

Then, organize them in a recipe, which takes the shape of a directed acyclic graph (DAG), using the @dag decorator:

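```python
from airflow.decorators import dag


@dag(...)
def abc():
    # Dependencies are wired by hand: B consumes A's output, C consumes both
    a = A()
    b = B(a)
    C(A=a, B=b)


# Calling the decorated function creates the DAG object Airflow discovers
abc_dag = abc()
```

Execute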

The Airflow system then manages a catalog of DAGs and is responsible for scheduling and executing them.

Learn more about Airflow and Prefect (another imperative macro-orchestrator)

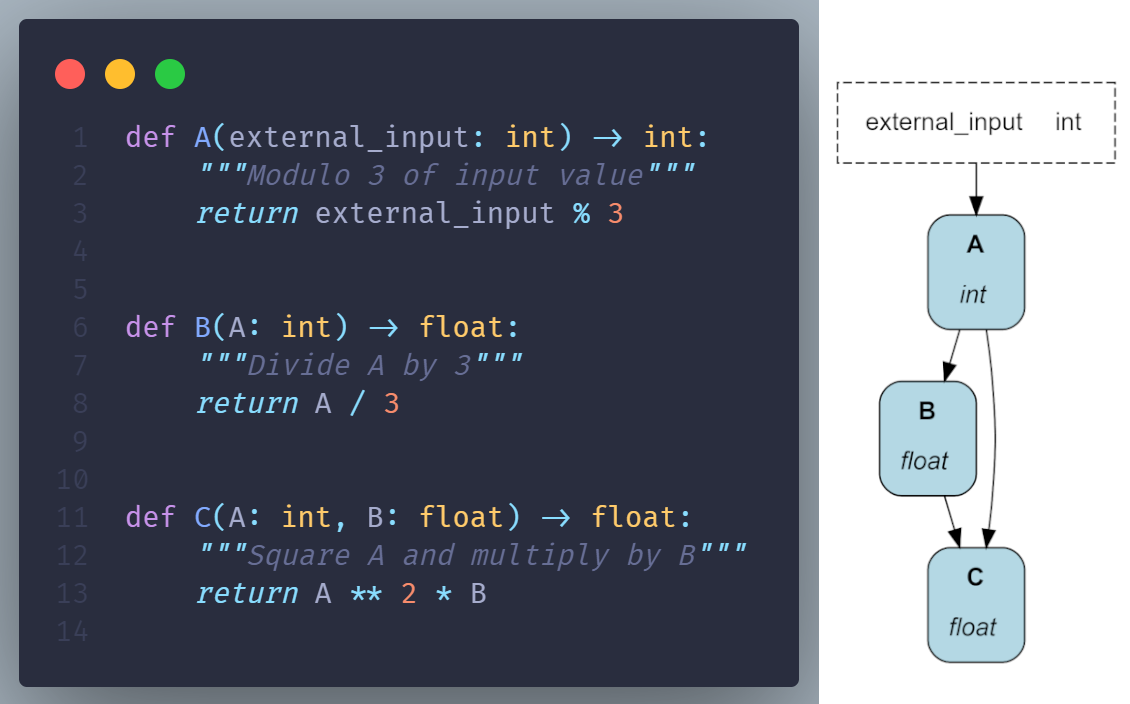

Hamilton (declarative)

Define

Hamilton uses a declarative approach to expressing a DAG. Developers write regular Python functions: the function name declares what can be computed (Hamilton calls these “nodes”, the equivalent of a “task”), and the function’s parameter names and types declare its dependencies.
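For the running example, a module of Hamilton node definitions could look like the sketch below. It mirrors the Airflow tasks above, with one assumption: the value Airflow read from `params` becomes a plain parameter, `external_input`, which matches no other function name, so Hamilton would expect it as an input at execution time. Note that there is no framework import or decorator.

```python
# definitions.py: plain Python functions, no Hamilton import needed.
# Function name = node name; parameter names = dependencies.


def A(external_input: int) -> int:
    """Modulo 3 of input value"""
    return external_input % 3


def B(A: int) -> float:
    """Divide A by 3"""
    return A / 3


def C(A: int, B: float) -> float:
    """Square A and multiply by B"""
    return A ** 2 * B
```

Because these are ordinary functions, they can also be called directly, e.g. `C(A(7), B(A(7)))`, which is what makes them easy to unit-test outside Hamilton.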

Assemble

Unlike imperative frameworks, Hamilton is responsible for loading the definitions and assembling the DAG automatically. This is done through the Driver object:

```python
from hamilton import driver

import definitions  # contains node definitions

dr = driver.Builder().with_modules(definitions).build()
```

Execute

While an Airflow DAG is a recipe that has to be executed from start to finish, a Hamilton DAG is more like a recipe book. To execute code, users request nodes and Hamilton determines the recipe to compute them on the fly.
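To make the “recipe book” idea concrete, here is a toy resolver in plain Python. It is not how Hamilton is implemented, only an illustration under simple assumptions: nodes are given as a dict of functions, the graph is acyclic, and any parameter that is not a node must be supplied in `inputs`. Requesting a node recursively resolves its parameters, so only the upstream subpath runs. The `execute` helper here is hypothetical and unrelated to Hamilton’s `Driver.execute`.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple


def A(external_input: int) -> int:
    return external_input % 3


def B(A: int) -> float:
    return A / 3


def C(A: int, B: float) -> float:
    return A ** 2 * B


def execute(
    nodes: Dict[str, Callable[..., Any]],
    requested: List[str],
    inputs: Dict[str, Any],
) -> Tuple[Dict[str, Any], List[str]]:
    """Compute the requested nodes plus only the upstream nodes they need.

    Returns the requested values and the names of the nodes that actually ran.
    Assumes the graph is acyclic (a cycle would recurse forever).
    """
    values: Dict[str, Any] = dict(inputs)  # external inputs are known up front
    ran: List[str] = []

    def resolve(name: str) -> Any:
        if name in values:  # already computed, or provided as an input
            return values[name]
        if name not in nodes:
            raise KeyError(f"{name!r} is neither a node nor a provided input")
        fn = nodes[name]
        # The function's parameter names are its incoming edges in the DAG
        kwargs = {param: resolve(param) for param in inspect.signature(fn).parameters}
        values[name] = fn(**kwargs)
        ran.append(name)
        return values[name]

    results = {name: resolve(name) for name in requested}
    return results, ran


nodes = {"A": A, "B": B, "C": C}
results, ran = execute(nodes, ["B"], inputs={"external_input": 7})
print(results)  # {'B': 0.3333333333333333}
print(ran)  # ['A', 'B']: "C" was never computed
```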

Compute all nodes and only return the value for “C”:

```python
# request node named "C"; returns a dictionary of results
results = dr.execute(["C"], inputs={"external_input": 7})
```

Compute only nodes “A” and “B” and return the value for “B”:

```python
# request node named "B"; returns a dictionary of results
results = dr.execute(["B"], inputs={"external_input": 7})
```

Compute all nodes and return their values:

```python
# request nodes named "A", "B", and "C"; returns a dictionary of results
results = dr.execute(["A", "B", "C"], inputs={"external_input": 7})
```

Dagster (declarative)

Define

Dagster was launched as an imperative framework (e.g., with the @op decorator) but introduced software-defined assets in 2022: a declarative API reminiscent of Hamilton. “Assets” (the equivalent of a “node” or “task”) are defined with @asset:

```python
from dagster import asset, Config


class AssetConfig(Config):
    external_input: int


@asset
def A(config: AssetConfig) -> int:
    """Modulo 3 of input value"""
    return config.external_input % 3


@asset
def B(A: int) -> float:
    """Divide A by 3"""
    return A / 3


@asset
def C(A: int, B: float) -> float:
    """Square A and multiply by B"""
    return A ** 2 * B
```

Assemble

As in Hamilton, asset definitions are registered first, and then the orchestrator assembles the DAG:

```python
from dagster import Definitions, load_assets_from_modules

from .assets import definitions  # contains assets definitions

defs = Definitions(assets=load_assets_from_modules([definitions]))
```

Execute

Code is executed through “asset jobs”. To create one, you specify the assets to compute, and Dagster structures the recipe:

```python
from dagster import (
    Definitions,
    load_assets_from_modules,
    define_asset_job,
)

from .assets import definitions  # contains assets definitions

defs = Definitions(
    assets=load_assets_from_modules([definitions]),
    jobs=[
        define_asset_job(name="abc")  # includes all assets by default
    ],
)
```

A Dagster asset job assumes you need all intermediary results. This is less flexible than Hamilton, which allows querying only subpaths of its DAG.

Imperative vs. Declarative Summary

DAG definition

Nowadays, Airflow, Hamilton, and Dagster share very similar APIs to define tasks/nodes/assets: they can all be Python functions. One differentiator is that Hamilton relies primarily on standard Python constructs (e.g., function names, parameter names, and type annotations) instead of framework-specific decorators such as @task or @asset. This makes code easier to reuse outside Hamilton.

While Airflow is purely imperative and Hamilton is declarative, Dagster can be both and may require mixing the two approaches, using @asset, @op, @graph_asset, and @graph_multi_asset decorators; this can get confusing.

DAG assembly

Being imperative, Airflow is the most restrictive and requires developers to specify how each task relates to the others. Hamilton and Dagster take very similar declarative approaches that involve importing and registering a Python module containing node/asset definitions.
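The difference in assembly can be sketched in plain Python. In the imperative style, edges are written by hand (as in the Airflow `abc()` DAG); in the declarative style, they are derived from the definitions themselves. The sketch below is an illustration rather than either framework’s code: the hypothetical `assemble` helper reads each function’s parameters to build the dependency graph, and the standard library’s `graphlib.TopologicalSorter` (Python 3.9+) orders it.

```python
import inspect
from graphlib import TopologicalSorter
from typing import Any, Callable, Dict, Iterable, Set


def A(external_input: int) -> int:
    return external_input % 3


def B(A: int) -> float:
    return A / 3


def C(A: int, B: float) -> float:
    return A ** 2 * B


# Imperative style: every edge is spelled out by the developer
handwritten_edges: Dict[str, Set[str]] = {"A": set(), "B": {"A"}, "C": {"A", "B"}}


def assemble(functions: Iterable[Callable[..., Any]]) -> Dict[str, Set[str]]:
    """Declarative style: derive each node's upstream nodes from its signature."""
    by_name = {fn.__name__: fn for fn in functions}
    return {
        name: {
            param
            for param in inspect.signature(fn).parameters
            if param in by_name  # other parameters are external inputs
        }
        for name, fn in by_name.items()
    }


derived_edges = assemble([C, A, B])  # registration order does not matter
print(derived_edges == handwritten_edges)  # True
print(list(TopologicalSorter(derived_edges).static_order()))  # ['A', 'B', 'C']
```

With three tasks, writing the edges by hand is easy; the derived version scales because adding a node only means adding a function.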

DAG execution

For Airflow, you specify a DAG, and it will be executed from start to finish.

For Hamilton, once the DAG is assembled, you can request nodes and the Driver will determine the necessary nodes to execute and produce the results.

For Dagster, once the DAG is assembled, you also need to create asset jobs to execute code. This adds a layer to manage.

DAG complexity

Imperative orchestrators (Airflow) are efficient when there are only a few tasks and the recipe is linear. When the number of tasks grows, it becomes difficult to manually specify dependencies and maintain code.

Declarative orchestrators (Hamilton, Dagster) better manage a large number of tasks and complex dependencies by automating the DAG assembly. Consequently, this approach favors writing smaller functions, resulting in code that’s easier to read, test, debug, and maintain.

Macro vs. Micro

Despite both adopting a declarative API, Dagster operates at the macro level while Hamilton operates at the micro level.

Macro-orchestration

Macro-orchestrators (Dagster, Airflow, Metaflow, Prefect) are platforms. A central instance is deployed on a server as a long-running process and manages the DAG definitions, executor, scheduler, metadata, and UI. Data resides outside of the macro-orchestrator in a database or data warehouse. When a DAG is executed, the orchestrator delegates the task to worker nodes, which load the data, transform it, and store results at the designated location.

Micro-orchestration

Micro-orchestrators are libraries. They are designed to operate in a single Python process without the need for a centralized server. Being libraries, they are easy to install and consequently portable. For instance, you can use Hamilton in a script, notebook, dashboard, Streamlit app, FastAPI server, Pyodide browser kernel, and anywhere else Python runs, even in Dagster or Airflow! Hamilton is the only general-purpose micro-orchestrator that covers data, machine learning, and LLM use cases.

Considerations

Use case flexibility

Dagster provides a great platform for creating data artifacts and handling the scheduling of computation. It’s a reasonable choice for a macro-orchestrator.

Hamilton doesn’t provide a platform, but it covers a broader range of use cases. Once you adopt it, you can use it for your data APIs, web apps, and LLM applications. Migrating to it requires minimal effort, it doesn’t restrict which other tools you use, and it pairs nicely with any macro-orchestrator.

Data size and computation

To be clear, macro vs. micro has nothing to do with the size of the data or the computation resources required. Both Hamilton and Dagster have integrations for Spark, Dask, Polars, etc. So both could be used for data of any size.

Commitment / Lock-in

Choosing a macro-orchestration framework is an important decision because it will be at the center of your data architecture. People rarely migrate away from one once it is in place, so it’s generally an “all or nothing” proposition.

Micro-orchestration, on the other hand, influences the way you write code and represents a much simpler proposition to adopt. With Hamilton, you can get started with a small task, see if you like it, and then continue from there without having to fully commit.

Haven’t tried Hamilton yet? Try Hamilton in your browser on tryhamilton.dev. As we said, it’s just a lightweight library that can even run inside your browser!

What do you need?

With Dagster, you…

Get a platform that can manage infrastructure and scale cloud resources

Have access to a full orchestration toolbox: scheduler, sensors, refresh, backfill

Commit to a data engineering platform with lock-in

With Hamilton, you…

Get a library to standardize how data transformations are expressed

Can develop interactively in a notebook or with the VSCode extension

Adopt a pluggable framework that can expand to your platform needs

Finally, it’s possible to use Dagster and Hamilton together! Since Hamilton is a Python library, you simply have to import it and use it within a Dagster @asset or @op function. Pick either tool to get started depending on your current needs, and try introducing the other once you are familiar with it. A sketch of using both could look like this, running Hamilton within a Dagster asset:

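```python
import pandas as pd
from dagster import asset
from hamilton import driver


@asset
def ml_model(raw_data: pd.DataFrame) -> Model:  # `Model` stands for your model type
    # Build a Hamilton Driver from the module(s) defining the training steps
    dr = driver.Builder().with_modules(...).build()
    # Pass the upstream Dagster asset into Hamilton as an input
    results = dr.execute(["ml_model"], inputs={"raw_data": raw_data})
    return results["ml_model"]
```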

FAQ

Q: What is the main difference between Dagster and Hamilton?

A: Dagster is a macro-orchestrator, meaning it is a full-fledged platform for data orchestration, while Hamilton is a micro-orchestrator, which is a lightweight Python library for defining and executing data pipelines.

Q: When should I use Dagster versus Hamilton?

A: False dilemma. You can use both together. Dagster is a good choice if you need a complete platform for managing data infrastructure, scheduling, and scaling cloud resources. Hamilton is a good choice if you want a flexible and lightweight library for defining and executing data pipelines within your existing Python applications, without the need for a dedicated orchestration platform. Both complement each other and can work well together.

Q: Can I use Dagster and Hamilton together?

A: Yes, since Hamilton is a Python library, you can import and use it within Dagster's @asset or @op functions.

Q: How do Dagster and Hamilton differ in terms of defining and assembling data pipelines?

A: Dagster uses a declarative approach with @asset decorators to define data assets, while Hamilton relies more on standard Python constructs like function signatures. Dagster requires creating separate "asset jobs" to execute pipelines, while Hamilton allows dynamically requesting the execution of specific nodes or pipelines.

Q: What are the benefits of Hamilton's micro-orchestration approach?

A: Hamilton's micro-orchestration approach offers flexibility, portability, and ease of adoption. It can be used in various Python environments like scripts, notebooks, web apps, and even within other orchestrators. It also avoids lock-in to a specific platform.

Q: How do Dagster and Hamilton handle complex dependencies and large numbers of tasks?

A: Both Dagster and Hamilton leverage a declarative approach to automatically assemble and manage complex dependencies between tasks or assets. This approach is generally better suited for handling large numbers of tasks compared to imperative frameworks like Airflow.

Q: What types of use cases can Hamilton handle beyond data pipelines?

A: Hamilton can be used for data APIs, web applications, and large language model (LLM) applications, in addition to data pipelines. Just browse this blog.

Links

📣 join our community on Slack — we’re more than happy to help answer questions you might have or get you started.

⭐️ us on GitHub

📝 leave us an issue if you find something

Other Hamilton posts you might be interested in: