Writing good data analysis code efficiently with Hamilton

A market analysis tutorial with data from People Data Labs

In this blog, we'll cover how Hamilton can help you seamlessly transition from one-off ad hoc analysis to well-structured and reusable code. We'll ground the discussion with some market analysis using data from the provider People Data Labs.

We'll cover:

Limitations of ad hoc data analyses

Move faster by writing modular code with Hamilton

Tutorial: Using Hamilton to analyze company data from People Data Labs

When analyses turn into data pipelines

See this GitHub repository for the full step-by-step tutorial

Ad hoc analysis

By ad hoc analysis, we refer to one-off data analyses that aim to answer specific business questions. Typically, it starts by exploring available data from an extract (e.g., .csv) or a database. Multiple combinations of filters, aggregations, statistics, and visualizations are tried to uncover relationships and provide insights.

Let’s say we’re at a venture capital firm looking to invest in private companies. Rich data helps us better understand how companies fare and the state of the market, and to seize financial opportunities. For example, we could quickly sketch the following code in a script or a notebook to:

get each company's employee count per month over a period of time to gauge growth / burn rate

get the distribution of companies across funding stages

```python
import pandas as pd

raw_df = pd.read_json("data/pdl_data.json")

employee_df = pd.json_normalize(raw_df["employee_count_by_month"])
employee_df["ticker"] = raw_df["ticker"]
employee_df = employee_df.melt(
    id_vars="ticker",
    var_name="year_month",
    value_name="employee_count",
)
employee_df["year_month"] = pd.to_datetime(employee_df["year_month"])

df = raw_df[[
    "id", "ticker", "website", "name", "display_name", "legal_name",
    "founded",
    "industry", "type", "summary", "total_funding_raised",
    "latest_funding_stage",
    "number_funding_rounds", "last_funding_date", "inferred_revenue",
]]

n_companies_funding = df.groupby("latest_funding_stage").size()
print("number of companies per funding stage:\n")
n_companies_funding.plot(kind="bar")

print("employee count per month:\n", employee_df)
```

While this code helps get the job done, it comes with drawbacks:

Readability: hard to understand what it does

Maintainability: difficult to add features or fix bugs

Reusability: no component would be usable in other analyses without work to refactor the code

However, we did answer the business questions, and these drawbacks only show up later. In plenty of scenarios, though, they quickly start to matter:

the business team likes the analysis and wants the insights refreshed weekly

the author of this code is resigning, and you now need to own it

a new team member needs to be onboarded to this code

it worked on a subset of the data from a CSV, but fails on the company's production data

...

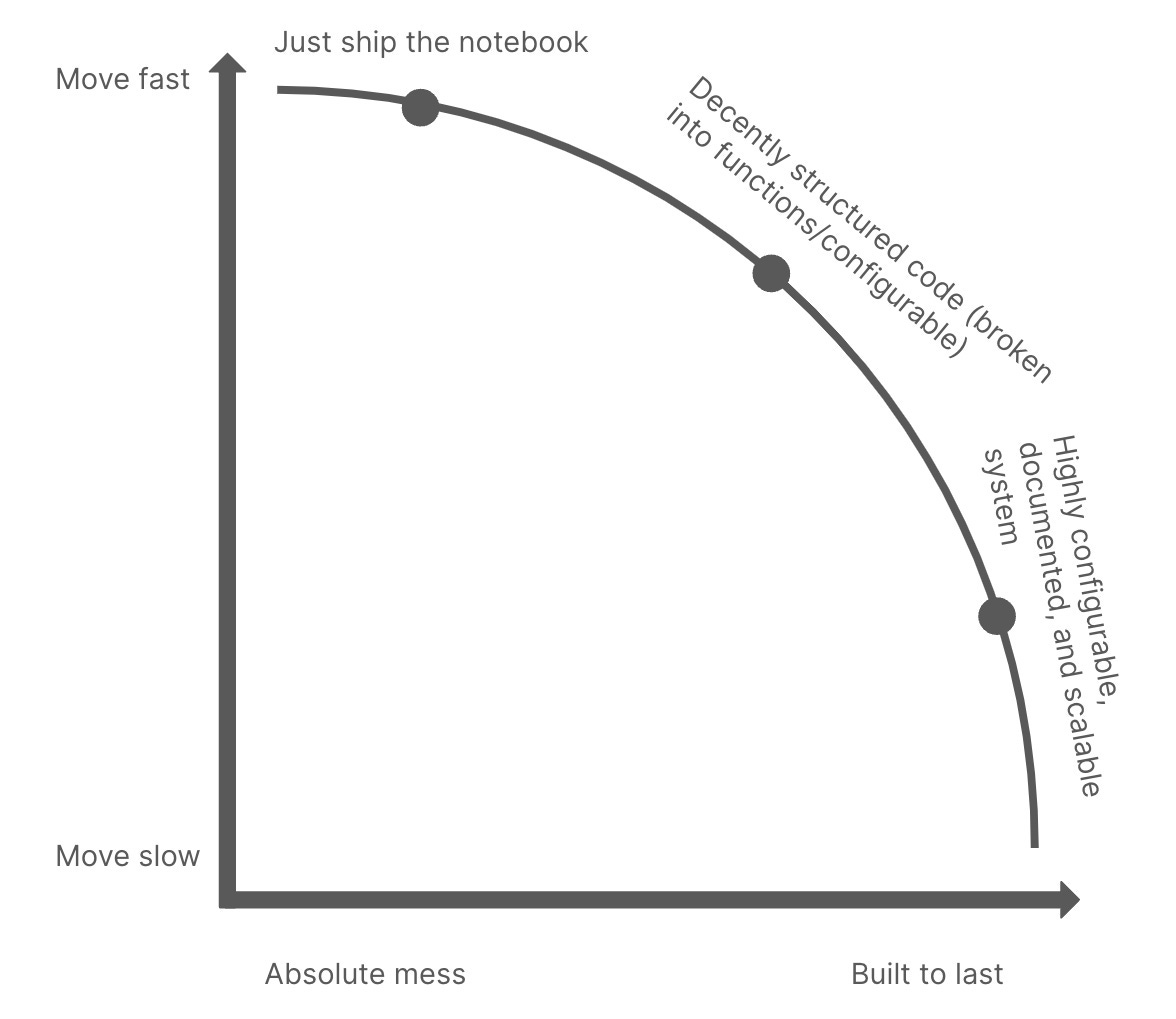

Ultimately, there's a trade-off between delivering value fast and building things to last.

Changing tradeoffs with Hamilton

In reality, businesses often prioritize delivery speed over robustness, posing a challenge to data professionals. What if you could move faster and produce better code? With good tooling and effective platform teams, we can reshape the tradeoff curve.

Hamilton adheres to this idea and aims to be lightweight to adopt and to deliver value quickly. In brief, Hamilton helps you structure and standardize your code using regular Python functions. It can be used with or without a notebook, and it provides a good methodology to go from code in a cell to better-structured code. To learn more about Hamilton notebook integrations, see this post.

To get started, we take the previous analysis and break it down into individual steps, with a Python function for each. In this case, we have:

loading the data

selecting relevant columns

counting the number of companies per stage

normalizing raw employee data

```python
import pandas as pd
from matplotlib.figure import Figure


def pdl_data(raw_data_path: str = "data/pdl_data.json") -> pd.DataFrame:
    """Load People Data Labs (PDL) company data from a json file."""
    return pd.read_json(raw_data_path)


def company_info(pdl_data: pd.DataFrame) -> pd.DataFrame:
    """Select columns containing general company info"""
    column_selection = [
        "id", "ticker", "website", "name", "display_name",
        "legal_name", "founded",
        "industry", "type", "summary", "total_funding_raised",
        "latest_funding_stage",
        "number_funding_rounds", "last_funding_date",
        "inferred_revenue",
    ]
    return pdl_data[column_selection]


def companies_by_funding_stage(company_info: pd.DataFrame) -> pd.Series:
    """Number of companies by funding stage."""
    # .size() counts rows per group and returns a Series
    return company_info.groupby("latest_funding_stage").size()


def companies_by_funding_stage_plot(
    companies_by_funding_stage: pd.Series,
) -> Figure:
    """Plot the number of companies by funding stage"""
    # Series.plot() returns a matplotlib Axes; return the Figure that holds it
    return companies_by_funding_stage.plot(kind="bar").get_figure()


def employee_count_by_month_df(
    pdl_data: pd.DataFrame,
) -> pd.DataFrame:
    """Normalized employee count data"""
    return (
        pd.json_normalize(pdl_data["employee_count_by_month"])
        .assign(ticker=pdl_data["ticker"])
        .melt(
            id_vars="ticker",
            var_name="year_month",
            value_name="employee_count",
        )
        .astype({"year_month": "datetime64[ns]"})
    )
```

Now, here's where the magic of Hamilton comes in: we specify the dependencies between steps using function names and parameter names. For example, the function company_info() has the parameter pdl_data, which refers to the function of the same name, pdl_data(). Hamilton automatically builds a “flowchart”, or directed acyclic graph (DAG), of operations from the function definitions.

Readability improves immediately because Python functions allow docstrings and type annotations to document your code. You can also visualize the steps of your analysis directly from your code, which helps with onboarding and offboarding. This also simplifies collaboration within and between teams because it standardizes the way code is written.
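To make the idea concrete, here is a minimal sketch, using only the standard library, of how a DAG can be read off function signatures. It is not Hamilton's implementation, just an illustration: each parameter whose name matches another function becomes an edge, and any leftover parameter is an input the caller must supply. The bodies are placeholders (`...`) and the types are simplified to `dict` because only the names matter here; `build_dag` is a hypothetical helper.

```python
import inspect
from graphlib import TopologicalSorter  # Python 3.9+


# Placeholders with the same names and parameters as the analysis functions.
def pdl_data(raw_data_path: str) -> dict: ...
def company_info(pdl_data: dict) -> dict: ...
def companies_by_funding_stage(company_info: dict) -> dict: ...
def companies_by_funding_stage_plot(companies_by_funding_stage: dict) -> object: ...
def employee_count_by_month_df(pdl_data: dict) -> dict: ...


def build_dag(functions):
    """Map each function name to the set of names it depends on (its parameters)."""
    return {f.__name__: set(inspect.signature(f).parameters) for f in functions}


dag = build_dag([pdl_data, company_info, companies_by_funding_stage,
                 companies_by_funding_stage_plot, employee_count_by_month_df])

# Parameters that match no function name are external inputs.
external_inputs = set().union(*dag.values()) - dag.keys()
print(external_inputs)  # {'raw_data_path'}

# Any topological order is a valid order in which to run the steps.
order = [name for name in TopologicalSorter(dag).static_order() if name in dag]
print(order)
```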

Maintainability is also improved because bugs and errors can be more quickly traced to a specific step. Also, it's much easier to extend the analysis; simply add another Python function, or modify an existing function for use in certain contexts only.
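As an illustration of extending the analysis, here is a hypothetical new step, `companies_by_funding_stage_share` (not part of the original analysis). Its parameter is named after the existing `companies_by_funding_stage` function, so Hamilton would wire the two together when the module is loaded. Because steps are plain functions, the sketch also calls it directly on a small toy Series.

```python
import pandas as pd


# Hypothetical new step: its parameter name matches the existing
# `companies_by_funding_stage` function, which is what connects the two.
def companies_by_funding_stage_share(companies_by_funding_stage: pd.Series) -> pd.Series:
    """Fraction of companies at each funding stage."""
    return companies_by_funding_stage / companies_by_funding_stage.sum()


# Plain functions can also be called directly, here on toy counts.
toy_counts = pd.Series({"series_a": 3, "series_b": 1})
print(companies_by_funding_stage_share(toy_counts))
```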

You can also reuse steps across similar projects because the code isn’t coupled to this specific analysis. Simply import the Python module containing your functions to use them in another context. Hamilton can assemble analyses from multiple Python modules, allowing you to cleanly curate code and then bring it together as needed into a single DAG.

Tutorial: How do employee count and stock value relate?

In this section, we walk through the tutorial. It uses a dataset from People Data Labs, which provides Person enrichment and Company enrichment APIs. This data can serve many analytics use cases, such as building custom audiences, marketing campaigns, and market analysis. We'll explore the relationship between the employee count growth rate and the stock value growth rate for private companies (Series A to D).

Above is a visualization generated from the code of the final analysis. That said, at the start of a project, it's useful to sketch the DAG of operations to have a roadmap of the code to write. For this analysis, we note the following steps:

load two data sources: People Data Labs company enrichment data and stock market data

filter companies to those at funding rounds A, B, C, and D

join company and employee count data

aggregate by company for the employee count and the stock value growth rate

join company and stock data
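Two of these steps can be sketched as Hamilton-style functions. The name `selected_companies` and the `rounds_selection` input come from this post, but the bodies here are our assumption. `employee_growth_rate` is a hypothetical name, and its definition, (last month minus first month) divided by the first month, is an illustrative choice that may differ from the tutorial's. The toy DataFrames are made up to show the shapes involved.

```python
import pandas as pd


def selected_companies(company_info: pd.DataFrame, rounds_selection: list) -> pd.DataFrame:
    """Keep companies whose latest funding stage is one of `rounds_selection`."""
    return company_info[company_info["latest_funding_stage"].isin(rounds_selection)]


def employee_growth_rate(employee_count_by_month_df: pd.DataFrame) -> pd.Series:
    """Relative change in employee count between each ticker's first and last month.

    Assumed definition for illustration: (last - first) / first.
    """
    by_ticker = (
        employee_count_by_month_df
        .sort_values("year_month")
        .groupby("ticker")["employee_count"]
    )
    first, last = by_ticker.first(), by_ticker.last()
    return ((last - first) / first).rename("employee_growth_rate")


# Toy data (not real PDL data).
companies = pd.DataFrame({
    "ticker": ["AAA", "BBB"],
    "latest_funding_stage": ["series_a", "post_ipo_equity"],
})
employees = pd.DataFrame({
    "ticker": ["AAA", "AAA", "BBB", "BBB"],
    "year_month": pd.to_datetime(["2023-01-01", "2023-06-01", "2023-01-01", "2023-06-01"]),
    "employee_count": [100, 150, 40, 30],
})
print(selected_companies(companies, ["series_a", "series_b", "series_c", "series_d"]))
print(employee_growth_rate(employees))
```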

Here's the entire analysis code. You don't have to go line by line, but notice how using Python functions helps with readability despite the complexity of the analysis. Look for function names, parameters, and how they relate to the above graph!

But where is the Hamilton code? 🤔 The great thing is that Hamilton doesn't interfere with how you define your analysis. Pass the analysis module (for example analysis.py) to the Hamilton Driver to assemble the DAG. Then, request specific steps by name (e.g., pdl_data, company_info) and Hamilton will automatically determine and execute the necessary operations to get your results.

```python
from hamilton import driver

import analysis  # e.g., the name of the file containing the analysis code

analytics_driver = (
    driver.Builder()
    .with_modules(analysis)  # pass the analysis code
    .build()
)

analytics_driver.display_all_functions()  # view the DAG of operations

# values to pass to functions
inputs = dict(
    pdl_file="pdl_data.json",
    stock_file="stock_data.json",
    rounds_selection=["series_a", "series_b", "series_c", "series_d"],
)

# request the node `augmented_company_info`;
# returns a dictionary of results
results = analytics_driver.execute(
    ["augmented_company_info"], inputs=inputs)
```
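Hamilton's Driver does much more than this (type checking, adapters, visualization), but the core idea behind requesting nodes by name can be sketched in a few lines. The sketch below is a hypothetical, stripped-down stand-in, not Hamilton's code: `toy_execute` starts from the requested names, recursively computes only their dependencies, and treats passed-in inputs as already-computed values. It ignores default parameter values and does not detect cycles. The toy functions are made up.

```python
import inspect
from typing import Any, Callable, Dict, List


def toy_execute(functions: List[Callable], final_vars: List[str],
                inputs: Dict[str, Any]) -> Dict[str, Any]:
    """Compute only the requested nodes and whatever they depend on."""
    by_name = {f.__name__: f for f in functions}
    cache: Dict[str, Any] = dict(inputs)  # inputs behave like already-computed nodes

    def compute(name: str) -> Any:
        if name not in cache:
            func = by_name[name]  # KeyError if `name` is neither an input nor a function
            kwargs = {param: compute(param) for param in inspect.signature(func).parameters}
            cache[name] = func(**kwargs)  # each node runs at most once
        return cache[name]

    return {name: compute(name) for name in final_vars}


calls = []  # records which functions actually ran


def raw_numbers(n: int) -> List[int]:
    calls.append("raw_numbers")
    return list(range(n))


def total(raw_numbers: List[int]) -> int:
    calls.append("total")
    return sum(raw_numbers)


def largest(raw_numbers: List[int]) -> int:
    calls.append("largest")
    return max(raw_numbers)


result = toy_execute([raw_numbers, total, largest], ["total"], {"n": 5})
print(result)  # {'total': 10}
print(calls)   # ['raw_numbers', 'total']; `largest` was not requested, so it never ran
```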

When analyses turn into data pipelines

Answering a business question almost always leads to two more, so finding the right balance between “build to last” and “move fast” usually pays off. Hamilton makes it simple to extend your analysis: just write a new function.

Also, out of all these “one-off” analyses, some will invariably turn into critical insights the business depends on. Using Hamilton from the start of a project provides a light structure to move fast, and also a solid foundation to progressively add the tests, data validation, orchestration, monitoring, etc. that critical applications require.

Let’s quickly examine a few ways the code could evolve:
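Because each step is a plain function, a unit test can call it directly with a tiny hand-written DataFrame, without running the whole DAG. Below is a minimal sketch written in pytest's `test_` convention (we assume pytest as the runner). The step is repeated here with `.size()` so that the block runs on its own.

```python
import pandas as pd


# The step under test, repeated here so the block is self-contained.
def companies_by_funding_stage(company_info: pd.DataFrame) -> pd.Series:
    """Number of companies by funding stage."""
    return company_info.groupby("latest_funding_stage").size()


def test_companies_by_funding_stage():
    # a tiny hand-written input is enough to pin down the expected behavior
    company_info = pd.DataFrame(
        {"latest_funding_stage": ["series_a", "series_a", "series_b"]}
    )
    result = companies_by_funding_stage(company_info)
    assert result.to_dict() == {"series_a": 2, "series_b": 1}
```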

Repeat the analysis for public companies

To change which companies are included, set the rounds_selection input to “post_ipo_equity”. The filter is applied in the selected_companies() function, as indicated by the graph. This is a 30-second change plus the time to run the code.

```python
inputs = dict(
    pdl_file="pdl_data.json",
    stock_file="stock_data.json",
    rounds_selection=["post_ipo_equity"],
)

results = analytics_driver.execute(
    ["augmented_company_info"], inputs=inputs
)
```

Repeat the analysis on fresh data

Download the fresh data and specify its path as input. Here, we set pdl_file and stock_file to their “new” values. This is a 30-second change plus the time to download the files and run the code.

```python
inputs = dict(
    pdl_file="new_pdl_data.json",
    stock_file="new_stock_data.json",
    rounds_selection=["series_a", "series_b", "series_c", "series_d"],
)

results = analytics_driver.execute(
    ["augmented_company_info"], inputs=inputs
)
```

Get data directly from the People Data Labs API

Previously, our pdl_data() function read a JSON file. We can replace it with a function that sends an HTTP request to the Company enrichment API. You can now query data directly from the provider by passing a query and your API key. This took maybe 3 minutes.

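```python
import pandas as pd
import requests
from hamilton.function_modifiers import config


# `data_source` is a hypothetical config key. With it set to "api", Hamilton
# registers this function as the `pdl_data` node (the `__api` suffix is dropped).
# The file-based pdl_data() would then need @config.when_not(data_source="api")
# so that only one `pdl_data` node exists.
@config.when(data_source="api")
def pdl_data__api(api_key: str, query: dict) -> pd.DataFrame:
    """Query the People Data Labs API"""
    PDL_URL = "https://api.peopledatalabs.com/v5/company/enrich"
    HEADERS = {
        "accept": "application/json",
        "content-type": "application/json",
        "x-api-key": api_key,
    }
    response = requests.get(PDL_URL, headers=HEADERS, params=query)
    response.raise_for_status()  # surface HTTP errors instead of parsing an error payload
    return pd.DataFrame.from_records([response.json()])
```

Conduct data quality check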

We want to make sure there are no null values in our employee counts because they would introduce errors into our calculations. With Hamilton, you just need to add a @check_output(allow_nans=False) decorator to validate the result of a step (NaN stands for “not a number”). This took 30 seconds.

Learn more about data quality in Hamilton.
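For intuition, the condition that `allow_nans=False` guards against can be written by hand in plain pandas. The sketch below uses a hypothetical helper, `check_no_nans`, and is not how `check_output` is implemented. It simply raises an error when any value in the DataFrame is NaN.

```python
import pandas as pd


def check_no_nans(df: pd.DataFrame) -> pd.DataFrame:
    """Raise if any value is NaN; otherwise return the DataFrame unchanged."""
    n_missing = int(df.isna().sum().sum())
    if n_missing:
        raise ValueError(f"found {n_missing} NaN value(s)")
    return df


clean = pd.DataFrame({"ticker": ["AAA"], "employee_count": [120.0]})
check_no_nans(clean)  # passes

dirty = pd.DataFrame({"ticker": ["AAA"], "employee_count": [float("nan")]})
try:
    check_no_nans(dirty)
except ValueError as err:
    print(err)  # found 1 NaN value(s)
```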

```python
from hamilton.function_modifiers import check_output


@check_output(allow_nans=False)
def employee_count_by_month_df(pdl_data: pd.DataFrame) -> pd.DataFrame:
    ...  # same body as before
```

Cluster companies with machine learning

Nothing stops you! Just import scikit-learn and start adding more functions.
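As a sketch of what this could look like, here are two hypothetical Hamilton-style functions that cluster companies with scikit-learn's k-means. `augmented_company_info` is the node from the tutorial, but the column names `employee_growth_rate` and `stock_growth_rate`, the function names, and the choice to standardize features first are all assumptions for illustration. The toy DataFrame is made up and has two obvious groups.

```python
import pandas as pd
from sklearn.cluster import KMeans
from sklearn.preprocessing import StandardScaler


def company_features(augmented_company_info: pd.DataFrame) -> pd.DataFrame:
    """Numeric columns to cluster on (hypothetical column names)."""
    return augmented_company_info[["employee_growth_rate", "stock_growth_rate"]].dropna()


def company_clusters(company_features: pd.DataFrame, n_clusters: int = 3) -> pd.Series:
    """Assign each company to a k-means cluster after standardizing the features."""
    scaled = StandardScaler().fit_transform(company_features)
    labels = KMeans(n_clusters=n_clusters, n_init=10, random_state=0).fit_predict(scaled)
    return pd.Series(labels, index=company_features.index, name="cluster")


# Toy input with two well-separated groups (not real data).
toy = pd.DataFrame({
    "employee_growth_rate": [0.0, 0.1, 0.05, 2.0, 2.1, 2.05],
    "stock_growth_rate": [0.0, 0.05, 0.1, 1.0, 1.05, 1.1],
})
print(company_clusters(company_features(toy), n_clusters=2))
```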

Conclusion

Data scientists need to deliver business value, but writing brittle code fast becomes costly later. Adopting Hamilton changes this tradeoff because you can write high quality code faster, and have a clear path to iterate over follow-up questions and improve robustness when needed. Readability and reproducibility aren’t luxuries. They’re requirements.

Hamilton can also help you with feature engineering, machine learning, and LLMs (more examples here).

Also, thanks to People Data Labs. This article was a lot of fun to write because of a rich dataset generously provided by them. You can get started with free credits.

We want to hear from you!

If you’re excited by any of this, or have strong opinions, drop by our Slack channel / leave some comments here! Some resources to help you get started:

📣 join our Hamilton community on Slack — need help with Hamilton? Ask here.

📝 leave us an issue if you find something

📈 check out the DAGWorks platform and sign up for a free trial

We recently launched Burr to create LLM agents and applications