Tools can multiply or divide productivity

How Hamilton facilitates the data science & engineering routine

A few weeks ago, I presented my talk, Think micro: Why modular machine learning pipelines are better, at the MLOps Community meetup in Montréal. It discusses the challenges of data science (and related fields) and how Hamilton attempts to solve them. Here’s a summary in 5 points.

1. Data science follows a routine

Writing code for data science is iterative. You never have a full blueprint of what to build on day one, and requirements are bound to change over time. Each iteration follows a certain set of steps:

You receive a business question:

How are sales looking?

Can we improve forecasting performance using XGBoost?

Are local LLMs as performant as OpenAI?

Form a hypothesis

Get the data

Implement code for your analysis

Run the analysis

Fix bugs 🦟

Explore and report results

Repeat

It’s critical to recognize that this is the nature of the job, and it won’t change. This holds true whether you’re doing business analytics, “traditional” machine learning, time-series forecasting, large language models (LLMs), or retrieval-augmented generation (RAG) systems.

2. Code needs maintenance

Each time you answer a new business question and go through an iteration of the data science routine, you’re adding code to your project. Without a principled approach, it starts to look like this:

However, code needs to be readable to become easy to debug, update, and reuse.

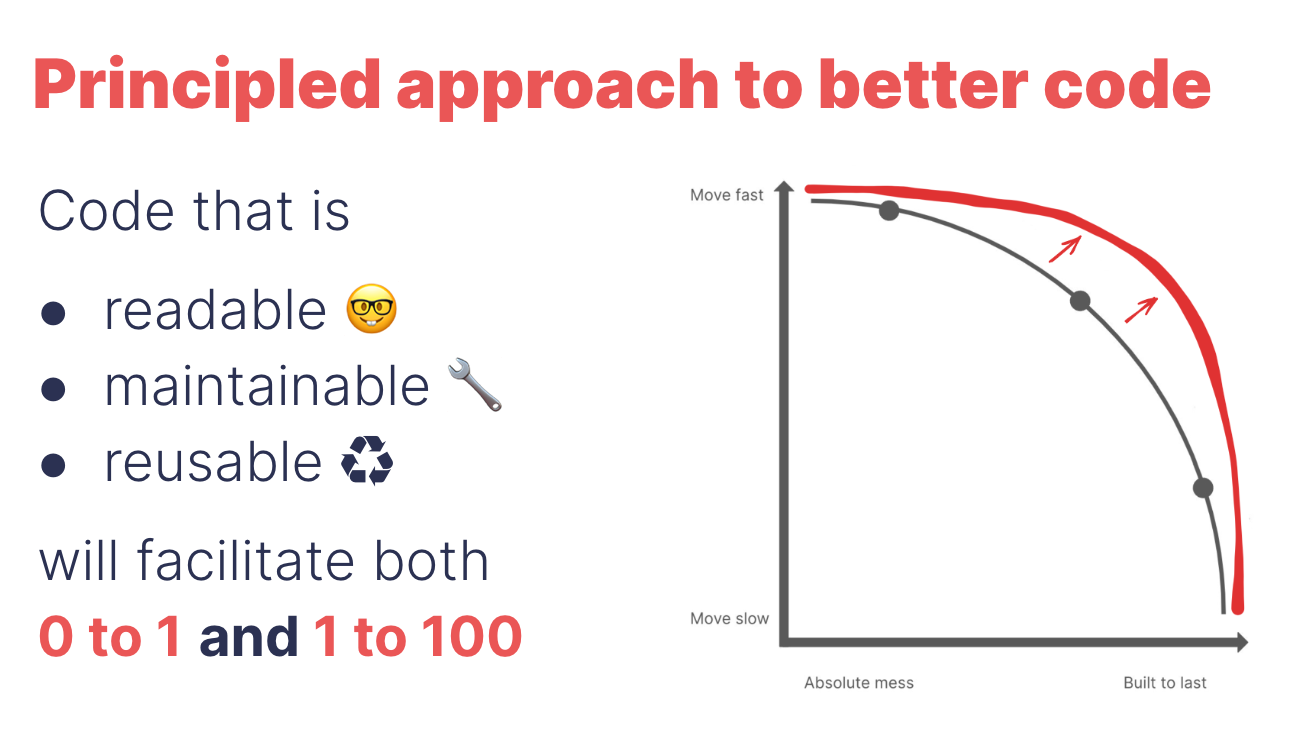

3. Move fast vs. Build to last

Data scientists are always faced with a trade-off between:

Move fast. Speed through the data science routine to answer a question

Build to last. Write more robust code using CI, testing, validation. Your system includes containers, experiment tracking, logging, execution observability, etc.

Businesses typically care most about speed. Moving fast all the time allows you to answer questions quickly, but each “data science iteration” adds code that will slow you down on your next iteration. It’s creating technical debt. Building to last all the time may be a waste of effort. Some questions are “one-offs”, and not all of them require production-grade code.

The key is that neither approach is inherently better. The one you take should depend on the requirements and on how you manage them as they evolve. You should also consider the cost over time, not just the cost of the current iteration.

4. Tools reshape the trade-off

The thesis of the talk is that data tools can reshape the “move fast vs. build to last” trade-off, for better or for worse.

With good tools, each project is completed faster and more robustly, benefiting from previous efforts. At a system level, this means fewer failure points and easier maintenance.

With bad tools, projects move slower because components have to be glued together, which introduces failure points and reduces observability. Each tool adds a premium to the project’s maintenance.

Reshaping the trade-off also means moving more effectively along the curve. In practice, this is a critical trait: how fast can you go from a notebook to a production REST API when requirements change?

5. How Hamilton reshapes the trade-off

Hamilton is a lightweight Python library to write data transformations as a directed acyclic graph (DAG). It works over two layers: definition and execution.

Dataflow definition

Hamilton helps you write better code with a simple, principled approach. Each function defines a “node” or “step” in the dataflow. The function name is the name of the node, and its parameters specify the other nodes it depends on.

This minimal structure allows you to move through the data science routine swiftly. Being decoupled from “dataflow execution” means you can focus on solving business questions before being concerned with production requirements.

This standardization helps with readability and allows Hamilton to automatically assemble functions into a DAG that can be visualized. It also reduces the accumulation of technical debt by making refactoring & additions straightforward.
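To make the naming convention concrete, here is a minimal sketch that uses only the Python standard library. It is not Hamilton’s implementation or API: the node functions (`raw_sales`, `total_sales`, `average_sale`) and the helpers (`dependencies`, `compute`) are hypothetical names made up for illustration. It only shows how function names and parameter names are enough to recover a DAG and evaluate it.

```python
import inspect
from typing import Optional

# Hypothetical dataflow: each function is a node named after the function.
# Each parameter name refers to another node or to an external input.
def raw_sales(sales_by_day: list[float]) -> list[float]:
    """Drop missing entries, encoded here as negative values."""
    return [s for s in sales_by_day if s >= 0]

def total_sales(raw_sales: list[float]) -> float:
    """Sum of all valid daily sales."""
    return sum(raw_sales)

def average_sale(total_sales: float, raw_sales: list[float]) -> float:
    """Mean of the valid daily sales."""
    return total_sales / len(raw_sales)

# Toy registry standing in for "a module of functions".
NODES = {fn.__name__: fn for fn in (raw_sales, total_sales, average_sale)}

def dependencies(name: str) -> list[str]:
    """Read a node's upstream dependencies from its parameter names."""
    return list(inspect.signature(NODES[name]).parameters)

def compute(name: str, inputs: dict, cache: Optional[dict] = None):
    """Resolve a node by recursively computing what it depends on."""
    cache = {} if cache is None else cache
    if name in inputs:  # external input supplied by the caller
        return inputs[name]
    if name not in cache:  # compute each node at most once
        kwargs = {dep: compute(dep, inputs, cache) for dep in dependencies(name)}
        cache[name] = NODES[name](**kwargs)
    return cache[name]

result = compute("average_sale", {"sales_by_day": [10.0, -1.0, 30.0]})
print(dependencies("average_sale"))  # ['total_sales', 'raw_sales']
print(result)  # 20.0
```

Because the structure lives entirely in the function signatures, adding a step means adding one function, and nothing else has to be rewired by hand.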

Dataflow execution

Once you’ve defined a dataflow, Hamilton makes it easy to opt in to the features you need to meet your requirements, whether it’s:

Unit testing and dataflow “path” testing

Execute using Spark, Ray, Dask, async, threading, multiprocessing

Run in an orchestrator (Airflow, Metaflow, Dagster) or serverless (AWS Lambda)

and more!

You shouldn’t have to care about this on day one, but you will inevitably have to, and Hamilton provides a clear path to it.
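As an illustration of the first item in the list above: since nodes are plain Python functions, a unit test can call one directly with hand-picked values, and a “path” test can chain a few nodes by hand. The sketch below assumes nothing beyond the standard library. The node names (`conversion_rate`, `is_healthy`) and the threshold are hypothetical, and the tests follow the pytest naming convention without requiring pytest.

```python
# Hypothetical nodes, written in the function-per-node style.
def conversion_rate(purchases: int, visits: int) -> float:
    """Share of visits that ended in a purchase."""
    if visits == 0:
        return 0.0  # avoid dividing by zero on days without traffic
    return purchases / visits

def is_healthy(conversion_rate: float, threshold: float = 0.02) -> bool:
    """Flag whether the conversion rate meets an assumed threshold."""
    return conversion_rate >= threshold

# Unit tests: call a single node with explicit arguments.
def test_conversion_rate_handles_zero_visits():
    assert conversion_rate(purchases=0, visits=0) == 0.0

def test_is_healthy_respects_threshold():
    assert is_healthy(conversion_rate=0.05)
    assert not is_healthy(conversion_rate=0.01)

# "Path" test: feed one node's output into the next, as the DAG would.
def test_path_from_counts_to_health_flag():
    rate = conversion_rate(purchases=5, visits=100)
    assert is_healthy(conversion_rate=rate)

if __name__ == "__main__":
    test_conversion_rate_handles_zero_visits()
    test_is_healthy_respects_threshold()
    test_path_from_counts_to_health_flag()
    print("all tests passed")
```

No mocking or pipeline setup is needed here, which is what makes testing something you can add later rather than a cost you pay on day one.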

I’m not the only one that thinks this way

I couldn’t agree more with the most recent video from Internet of Bugs on “clean code”:

The point of code maintainability is to make the code easier to work on for someone who's unfamiliar with it. Not to make it easier to write in the first place.

It's not about some hypotheticals "How changes might get made in the future". It's about "How do you find the places that changes need to be made now" and "How do you assure that those changes are isolated".

To his last sentence, Hamilton is literally designed to make that as simple as possible. The use of type-annotated and documented functions makes it easy to understand and extend your codebase. If you encounter a bug, issue, or error, it’s straightforward to determine whether it originated in your Hamilton dataflow code and, if so, to quickly find the code responsible for the output or error in question.

Closing thoughts

I’m happy my talk was so well received. Several data scientists and engineers shared how much it echoed their reality.

⭐Consider starring Hamilton

If you’d like to learn more about Hamilton please try:

You might also be interested in the articles below that overlap with the themes of my talk!

Writing good data analysis code efficiently with Hamilton

In this blog, we'll cover how Hamilton can help you seamlessly transition from one-off ad hoc analysis to well-structured and reusable code. We'll ground the discussion with some market analysis using data from the provider People Data Labs. We'll cover:

How well-structured should your data code be?

In this post we discuss the inherent tradeoff between moving quickly and (not) breaking things. While it is geared towards those in the data space, it can be read and enjoyed by anyone who writes code or builds systems, and feels that they are constantly under pressure to move quickly. We use the framing of a Data Scientist as someone that works in a se…