Chat with your webpage with Scrapegraph, Burr and Lancedb

In this post we are going to walk through the example of using the scrapegraph SDK with LanceDB and Burr. While Scrapegraph and Burr already have an integration layer, this is a very powerful, simple approach using the scrapegraph SDK.

This is written in collaboration with Scrapegraph, and adapted from the example on their repository.

First we will go over the tools — Burr, Scrapegraph, and LanceDB. We will next walk through the code, show how it looks in production, and provide some further reading.

The tools

ScrapeGraph

ScrapeGraphAI is a Python library for web scraping that leverages LLMs and direct graph logic to build efficient scraping pipelines for websites and local documents, including XML, HTML, JSON, and Markdown files. With over 16k stars on GitHub and an active Discord community, it has become a popular tool for developers. The library also offers a hosted API, enabling access to its most advanced scraping services, including:

SmartScraper: extracts structured content from any webpage given a URL, a prompt, and optionally, an output schema.

LocalScraper: works similarly to SmartScraper but is tailored for offline HTML files.

The API is production-ready and includes features such as automatic proxy rotation, anti-bot solutions, and fixed output response verification. In the example below, we’ll demonstrate how to use the Markdownify service from the hosted API. While the service includes a free tier, you can always opt for the OS version.

Burr

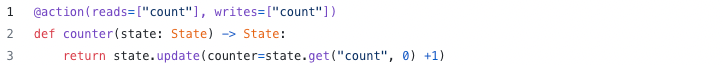

Burr is a lightweight Python library you use to build applications as state machines. You construct your application out of a series of actions (these can be either decorated functions or objects), which declare inputs from state, as well as inputs from the user. These specify custom logic (delegating to any framework), as well as instructions on how to update state. State is immutable, which allows you to inspect it at any given point. Burr handles orchestration, monitoring, persistence, etc…).

You run your Burr actions as part of an application – this allows you to string them together with a series of (optionally) conditional transitions from action to action.

Burr comes with a user-interface that enables monitoring/telemetry, as well as hooks to persist state/execute arbitrary code during execution.

You can visualize this as a flow chart, i.e. graph / state machine:

And monitor it using the local telemetry debugger:

While the above example is a simple illustration, Burr is commonly used for AI assistants (like in this example), RAG applications, and human-in-the-loop AI interfaces. See the repository examples for a (more exhaustive) set of use-cases.

LanceDB

(From the LanceDB github) LanceDB is an open-source database for vector-search built with persistent storage, which greatly simplifies retrieval, filtering and management of embeddings.

The key features of LanceDB include:

Production-scale vector search with no servers to manage.

Store, query and filter vectors, metadata and multi-modal data (text, images, videos, point clouds, and more).

Support for vector similarity search, full-text search and SQL.

Native Python and Javascript/Typescript support.

Zero-copy, automatic versioning, manage versions of your data without needing extra infrastructure.

GPU support in building vector indices.

Ecosystem integrations with LangChain, LlamaIndex, Apache-Arrow, Pandas, Polars, DuckDB and more on the way.

LanceDB's core is written in Rust and is built using Lance, an open-source columnar format designed for performant ML workloads. We won’t be leveraging the full extent of its capabilities in this post (scale/multi-modality) — but it makes an ideal candidate for tutorial as it is open-source and easy to get started with.

Chat with your Webpage

Now that we’ve gone over our tools, let’s talk about how we’ll use them! Our goal is to define a flow (Burr application) that:

Fetches markdown from webpages (scrapegraph)

Chunks the content and stores it in a vector store (lancedb)

Allows to query the db and generate an answer using a LLM

We can see all of this as Nodes connected together in a Graph, where the Nodes are the actions we want to perform.

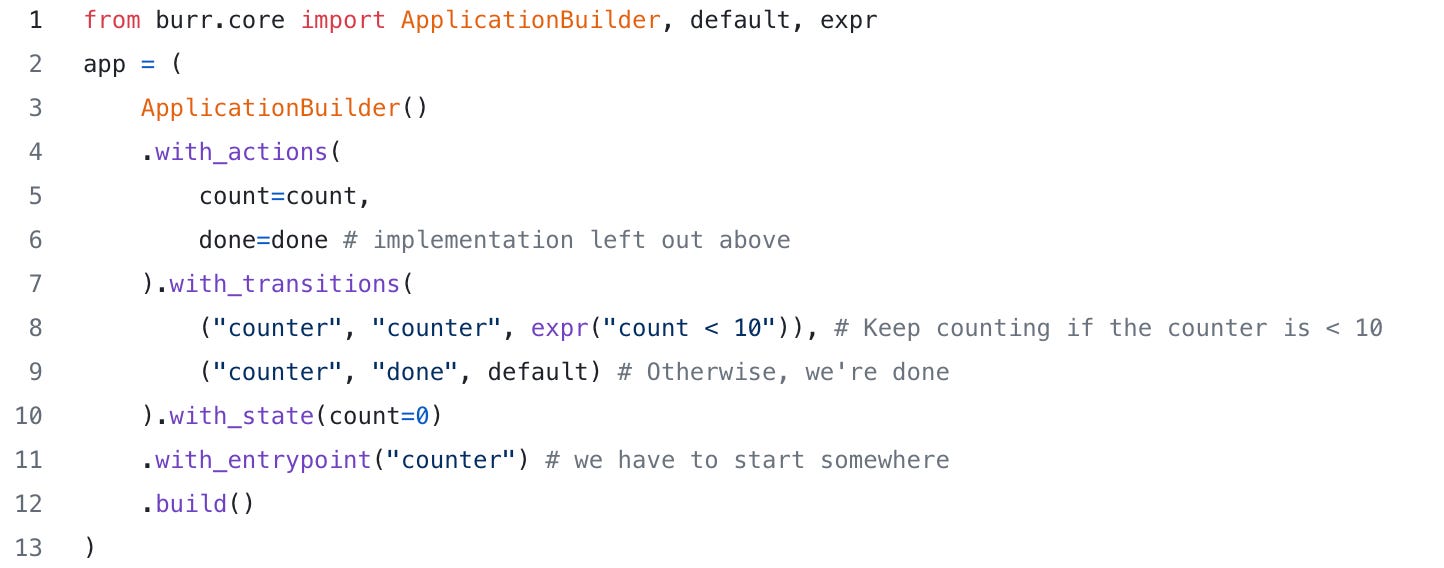

First, we will instantiate a scrapegraph client/set the logging level:

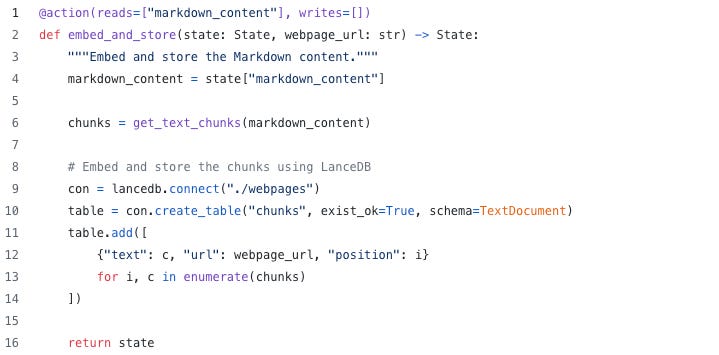

We will then use Burr’s functional API to define some actions. Let’s start by scraping a webpage:

Then, let’s define some helper functions/data models to hold chunks from our page in the lancedb vector store, as well as some tokenizing utility functions to parse our text:

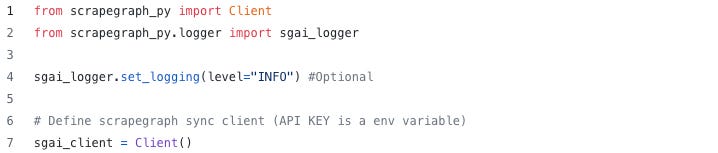

Now, let’s define our action — it creates a local vector store if it can’t find one, and adds the chunks to the chunks table. Note that this is parameterized — the user provides the URL for a webpage, which gets used for scraping:

Our last action will be asking a question of the page — taking in a user query and outputting the response. This is simple — it passes the context into the OpenAI API, and returns the contents of the response.

To ensure visibility, we turn on opentelemetry logging (so Burr can pick up traces), and wire together our actions in an application!

Finally, we can run it, passing the query and the webpage URL:

As you can see, it is completely correct:

To debug, we can examine the trace in the Burr UI — you can see every step of the process, as well as attributes/traces for the lance/OpenAI queries:

Wrapping Up

While we went over a fairly simple example, there’s a lot more we can do. In particular, we can:

Bypass loading the page if we already have loaded it

Provide more interactive query tooling — ask multiple questions of the same page

Log to a Burr cloud instance to get persistence telemetry

Additionally, log to any opentelemetry provider

Use SmartScraper to extract a summary and some keywords for each chunks to use as metadata in the vector store, to improve retrieval

Semantic crawling to fetch multiple webpages from the same domain and chat with the whole website

If you’re interested in reading more, or want to get started on these, don’t hesitate to reach out! Here are some more resources to get you started:

🚀 Get your API Key: ScrapeGraphAI Dashboard

🐙 GitHub: ScrapeGraphAI GitHub

⏩ Burr: Github

🛢️ LanceDB: Github

Just coming from a session where Stefan Krawczyk introduces burr shortly, your blog post fits perfectly. Very interesting!