Burr – Develop Stateful AI Applications

Build, debug, and monitor anything from chatbots to agents (+more!)

TL;DR

Managing state for AI applications is hard, especially when it’s used to make decisions that impact your users. We built Burr to make this easier: it comes with a powerful API for building applications as state machines, a host of integrations, and a telemetry UI for debugging and tracking in production. We’ll talk through the motivation and reasoning behind it, then implement a simple chatbot. If you just want to get started, skip to the “A state machine for AI (and more!)” section.

Managing state is hard

One of the most important considerations in building robust applications is managing state. This is generally one of the hardest problems in software (after, perhaps, cache invalidation and naming things). It is why the field of databases exists: almost everyone needs to persist the state of their program for future use, debugging/inspection, and fault tolerance.

State management gets even more difficult in the rapidly developing field of AI applications, where the state is fed to complex models to make decisions on what the application does next. Let’s think about what it might take to implement a simple chatbot, such as the ChatGPT web UI.

The ChatGPT web UI has:

A left-hand panel that shows past conversations.

The main UI pane where interaction with the current conversation occurs.

Behind the scenes, the chatbot may select and delegate to a variety of sub-models (code, image generation, etc.) to accomplish what was requested.

More specifically, here are a few requirements [1]:

Track/store all user sessions (conversations)

Use the result of internal (decision) models to determine which models to query:

Is this question something the model feels comfortable answering? If so, ask it; if not, return an error…

Should I write some code to answer this question?

Should I display an image to answer the question?

Delegate, wait for response, stream it back, and store it in session history

Track context and pass it back to the model

Remember the chat history

Attempt to extend the context window by remembering salient points from further back

Attempt to extend even further with RAG (not ChatGPT necessarily, but definitely standard for chatbots)

Provide the ability to annotate the interactions for evaluation and fine-tuning

Monitor for anomalies, failure cases, etc…

Concerns like these show up all the time in applications that leverage foundation models, and getting them right is really important. The context, history, and state all determine the inputs to a variety of models. Garbage in = garbage out, and garbage out = user churn. Due to the stochastic and prompt-sensitive nature of LLMs, a mistake can be tremendously hard to debug. Without visibility into the inputs of your models (all of which derive from the state of your application), you have no sense of why your application made the decision it did. Not only is your app complicated to develop, but debugging it involves a ton of post-hoc print statements, logging, and integrations with various tracing frameworks. This is why many AI developers really just want to be shown the prompt: if you understand the inputs and outputs of every part of your application, it is much easier to debug.

State machines to the rescue

State machines are a computational model you can leverage to help handle these concerns. A state machine represents your program as being in one of a set of (finite or infinite) states at any given point, and models the transitions between them. While most computation can be (and often is) implicitly modeled as a state machine (ranging from Turing machines to CPUs and even quantum computing), only a few fields explicitly model their programs as state machines. They are particularly favored in gaming and frontend web development, where state can be wide-ranging and complex, and where logic is often very difficult to implement without explicitly modeling control flow as states and state transitions.

Explicitly representing applications as a state machine affords you the following two advantages:

Every step becomes a function of state -> state, implying:

Every step is unit-testable

You can understand exactly the impact that step had on state

You can rewind your application to start at an error point, or debug a particularly complex decision it made

State can naturally be persisted and loaded outside of user code, implying:

Your code does not need to handle persistence inside its logic, reducing the cognitive burden of getting an application to production

You can use a variety of off-the-shelf state persistence solutions (or build your own!)

The challenges we presented above all start getting simpler when you think this way. More on what that looks like shortly.
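To make the state -> state idea concrete, here is a minimal plain-Python sketch (no Burr required). It assumes a toy representation in which state is a read-only mapping; `make_state`, `add_user_message`, and `run_steps` are hypothetical helpers for illustration, not part of Burr's API.

```python
from types import MappingProxyType
from typing import Any, Callable, List, Mapping

# Toy model (not Burr's implementation): state is a read-only mapping and
# every step is a pure function that returns a *new* state.
State = Mapping[str, Any]
Step = Callable[[State], State]


def make_state(**fields: Any) -> State:
    """Build a read-only state so steps cannot mutate it in place."""
    return MappingProxyType(dict(fields))


def add_user_message(state: State, prompt: str) -> State:
    """state -> state: record the prompt and append it to the chat history."""
    history = [*state["chat_history"], {"role": "user", "content": prompt}]
    return make_state(**{**state, "prompt": prompt, "chat_history": history})


def run_steps(initial: State, steps: List[Step]) -> List[State]:
    """Apply steps in order, keeping every intermediate state for inspection."""
    states = [initial]
    for step in steps:
        states.append(step(states[-1]))
    return states


# Each step can be unit-tested on its own, with no persistence code involved.
start = make_state(chat_history=[])
after = add_user_message(start, "Who was Aaron Burr?")
assert after["prompt"] == "Who was Aaron Burr?"
assert start["chat_history"] == []  # the input state is unchanged

# Because every intermediate state is kept, we can "rewind" to any point
# and re-run from there, e.g. to debug a surprising decision.
states = run_steps(start, [
    lambda s: add_user_message(s, "hi"),
    lambda s: add_user_message(s, "unsafe question"),
])
replayed = add_user_message(states[1], "a different second question")
```

Keeping the history of states is what makes rewinding cheap here; a real framework would persist those snapshots rather than hold them in a list.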

A state machine for AI (and more!)

We created Burr because state machines provide a lot of value but are complicated to work with. Burr makes expressing AI applications as state machines more natural, allowing developers to unlock the benefits above.

To walk through Burr, we’re going to create a bare-bones chatbot, expand it, and show how you would use Burr to get it into production. If you’d rather not read, here’s a video walkthrough of the material below using this notebook.

Building a simple chatbot

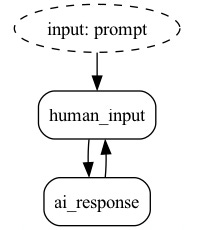

The simplest chatbot has two steps:

Human input

AI output

We’re going to leverage the OpenAI API to handle this for us, but this applies equally to any foundation model vendor, as well as to running your own open-source models in-house.

What does this look like in Burr? First, we define each of those steps as an action. Burr has two APIs for specifying actions: function-based and class-based. We’re going to work with the function-based API. You can think of an action as having two responsibilities:

run – this is where the heavy lifting happens

update – updates the state

run produces a result (represented as a dictionary), and update takes that result and merges it back into the overall state. We split this into two steps so that an action can have semantic outputs along with the state it produces. Updates then use the State API, which allows you to make updates to an immutable state object (returning a new one). In the functional API, we do both at the same time: our function returns a tuple of (result: dict, state_with_updates: State).

Actions declare the subset of state they read from and write to, which improves the readability of the code and enables the framework to perform validations and runtime checks.

Lastly, actions can declare external inputs: values the user is required to pass in before the action executes. In the functional API these are represented as function parameters, which you inject while running the application.

With the functional API, our actions look like this:

from typing import Tuple

import openai
from burr.core import action, State, ApplicationBuilder


@action(reads=[], writes=["prompt", "chat_history"])
def human_input(state: State, prompt: str) -> Tuple[dict, State]:
    # `prompt` is an external input, supplied when we run the application
    chat_item = {
        "content": prompt,
        "role": "user"
    }
    # return the prompt as the result
    # put the prompt in state and append it to the chat_history
    return (
        {"prompt": prompt},
        state.update(prompt=prompt).append(chat_history=chat_item)
    )


@action(reads=["chat_history"], writes=["response", "chat_history"])
def ai_response(state: State) -> Tuple[dict, State]:
    # the OpenAI client reads OPENAI_API_KEY from the environment
    client = openai.Client()
    content = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=state["chat_history"],
    ).choices[0].message.content
    chat_item = {
        "content": content,
        "role": "assistant"
    }
    # return the response as the result
    # put the response in state and append it to the chat history
    return (
        {"response": content},
        state.update(response=content).append(chat_history=chat_item)
    )

Each action computes something and adds it to the chat history. It also writes to the response and prompt fields, which we can use for debugging. Note that you have a few choices in how to model this: you can store the full chat history, or just the most recent message. Storing the chat history is nice because it lets us remember the entire conversation and pass it directly to the frontend to render (making it easy for a React-like framework to do so).
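To make the reads/writes declarations and the (result, new_state) contract more concrete, here is a toy re-implementation in plain Python. It is a sketch under our own assumptions, not how Burr implements actions: `toy_action` and `run_toy_action` are hypothetical names, state is a plain dict, and the check simply rejects any changed field that was not declared in `writes`.

```python
from typing import Any, Callable, Dict, List, Tuple

StateDict = Dict[str, Any]
ActionFn = Callable[..., Tuple[Dict[str, Any], StateDict]]


def toy_action(reads: List[str], writes: List[str]) -> Callable[[ActionFn], ActionFn]:
    """Hypothetical decorator: attach read/write declarations to a function."""
    def wrap(fn: ActionFn) -> ActionFn:
        fn.reads = reads  # type: ignore[attr-defined]
        fn.writes = writes  # type: ignore[attr-defined]
        return fn
    return wrap


def run_toy_action(fn: ActionFn, state: StateDict, **inputs: Any) -> Tuple[Dict[str, Any], StateDict]:
    """Run an action on a shallow copy of state and enforce its declarations."""
    missing = [key for key in fn.reads if key not in state]  # type: ignore[attr-defined]
    if missing:
        raise KeyError(f"state is missing declared reads: {missing}")
    # External inputs (e.g. `prompt`) are passed through as keyword arguments.
    result, new_state = fn(dict(state), **inputs)
    sentinel = object()
    changed = {key for key in new_state if state.get(key, sentinel) != new_state[key]}
    undeclared = changed - set(fn.writes)  # type: ignore[attr-defined]
    if undeclared:
        raise ValueError(f"action wrote undeclared fields: {sorted(undeclared)}")
    return result, new_state


@toy_action(reads=[], writes=["prompt", "chat_history"])
def human_input(state: StateDict, prompt: str) -> Tuple[Dict[str, Any], StateDict]:
    chat_item = {"content": prompt, "role": "user"}
    # Build a new dict instead of mutating the one we were given.
    new_state = {**state, "prompt": prompt,
                 "chat_history": [*state.get("chat_history", []), chat_item]}
    return {"prompt": prompt}, new_state


result, new_state = run_toy_action(human_input, {"chat_history": []}, prompt="Who was Aaron Burr?")
```

Declaring reads and writes up front is what makes a check like this possible at all: without the declarations, a runner has no way to tell an intended write from an accidental one.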

Let’s tie this together into an application, which is how Burr navigates between actions. We use the ApplicationBuilder [2], to which we add:

The actions, with names

An initial state

Transitions between actions

The entrypoint (first action) – the app needs to start somewhere

app = (
    ApplicationBuilder()
    .with_actions(
        human_input=human_input,
        ai_response=ai_response
    )
    .with_transitions(
        ("human_input", "ai_response"),
        ("ai_response", "human_input")
    )
    .with_state(chat_history=[])
    .with_entrypoint("human_input")
    .build()
)

Note that this state machine is non-terminating: we keep circling back for the next input. This allows us to build a continuous loop of human interaction. Burr makes it easy to display this as a graph (see the docs for more).

Finally, let’s run our application. We call the application’s run method, which executes until a certain condition has been met. In this case, we halt execution after the ai_response step and grab the state for downstream use.

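# named last_action so we don't shadow the @action decorator imported above
last_action, result, state = app.run(
    halt_after=["ai_response"],
    inputs={"prompt": "Who was Aaron Burr?"}
)
render(state["chat_history"])  # render: placeholder for however you display the chat

And it’s as easy as that!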
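Conceptually, run keeps executing actions and following transitions until an action listed in halt_after completes. Here is a toy, offline sketch of that loop for the two-action chatbot, under our own assumptions: `toy_run` is a hypothetical helper (not Burr's engine), state is a plain dict, and `fake_ai_response` stands in for the OpenAI call.

```python
import inspect
from typing import Any, Callable, Dict, List, Tuple

StateDict = Dict[str, Any]


def human_input(state: StateDict, prompt: str) -> StateDict:
    message = {"role": "user", "content": prompt}
    return {**state, "prompt": prompt, "chat_history": [*state["chat_history"], message]}


def fake_ai_response(state: StateDict) -> StateDict:
    # Stand-in for the LLM call so the sketch runs without network access.
    content = f"You asked: {state['prompt']}"
    message = {"role": "assistant", "content": content}
    return {**state, "response": content, "chat_history": [*state["chat_history"], message]}


ACTIONS: Dict[str, Callable[..., StateDict]] = {
    "human_input": human_input,
    "ai_response": fake_ai_response,
}
# Unconditional transitions: the loop never terminates on its own.
TRANSITIONS: Dict[str, str] = {"human_input": "ai_response", "ai_response": "human_input"}


def toy_run(state: StateDict, entrypoint: str, halt_after: List[str],
            inputs: Dict[str, Any], max_steps: int = 100) -> Tuple[str, StateDict]:
    """Execute actions from `entrypoint` until one named in `halt_after` completes."""
    current = entrypoint
    for _ in range(max_steps):
        fn = ACTIONS[current]
        # Hand each action only the external inputs it declares as parameters.
        params = inspect.signature(fn).parameters
        state = fn(state, **{k: v for k, v in inputs.items() if k in params})
        if current in halt_after:
            return current, state
        current = TRANSITIONS[current]
    raise RuntimeError(f"no action in {halt_after} completed within {max_steps} steps")


last_action, state = toy_run({"chat_history": []}, "human_input",
                             halt_after=["ai_response"],
                             inputs={"prompt": "Who was Aaron Burr?"})
```

The max_steps guard is our own addition: because this state machine is non-terminating, a typo in halt_after would otherwise loop forever.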

Adding a decision-making step

While checking inputs for safety can be a controversial topic, most chatbots want some protection against subversive users. So, let’s add a step that determines safety. Although the ChatGPT API does this for you, we’re going to add a (toy) heuristic to demonstrate: if the word “unsafe” appears in the prompt, we decide we cannot answer it. We will add:

An action to decide whether this is safe or not to answer

An “unsafe response” action that tells the user the request is not safe to answer

@action(reads=["prompt"], writes=["safe"])
def safety_check(state: State) -> Tuple[dict, State]:
    # toy heuristic: refuse any prompt containing the word "unsafe"
    safe = "unsafe" not in state["prompt"]
    return {"safe": safe}, state.update(safe=safe)


@action(reads=[], writes=["response", "chat_history"])
def unsafe_response(state: State) -> Tuple[dict, State]:
    content = "I'm sorry, my overlords have forbidden me to respond."
    new_state = (
        state
        .update(response=content)
        .append(chat_history={"content": content, "role": "assistant"})
    )
    return {"response": content}, new_state

Next, let’s adjust the application to route accordingly. In this case, we use conditional transitions:

from burr.core import when

safe_app = (
    ApplicationBuilder()
    .with_actions(
        human_input=human_input,
        ai_response=ai_response,
        safety_check=safety_check,
        unsafe_response=unsafe_response
    )
    .with_transitions(
        ("human_input", "safety_check"),
        ("safety_check", "unsafe_response", when(safe=False)),
        ("safety_check", "ai_response", when(safe=True)),
        (["unsafe_response", "ai_response"], "human_input"),
    )
    .with_state(chat_history=[])
    .with_entrypoint("human_input")
    .build()
)

Finally, we run it, halting after either of the two response actions.

last_action, result, state = safe_app.run(
    halt_after=["ai_response", "unsafe_response"],
    inputs={"prompt": "Who was Aaron Burr, sir (unsafe)?"}
)
render(state["chat_history"])

Our state machine is now more complicated, but it is also more powerful! And this is just the tip of the iceberg. Say we have multiple AI models (or even business logic) we want to delegate to, plus one model that selects the right one for the task (as in a quick-and-dirty mixture of experts). You might end up with a larger state machine in which a router action decides which expert action to transition to.

See the examples in the GitHub repository for a more detailed implementation.
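The conditional transitions in safe_app can be pictured as an ordered list of (from, to, condition) rules. Below is a toy resolver in plain Python under our own assumptions: `toy_when` is a hypothetical analogue of when(...) that checks state fields for equality, and `next_action` takes the first rule out of the current action whose condition holds. This is an illustration, not Burr's implementation, and Burr's exact evaluation rules may differ.

```python
from typing import Any, Callable, Dict, List, Optional, Tuple

StateDict = Dict[str, Any]
Condition = Callable[[StateDict], bool]


def toy_when(**expected: Any) -> Condition:
    """Hypothetical analogue of when(...): true if every named field matches."""
    return lambda state: all(state.get(k) == v for k, v in expected.items())


def always(state: StateDict) -> bool:
    return True


# (from, to, condition) triples, mirroring the safe_app transitions.
TRANSITIONS: List[Tuple[str, str, Condition]] = [
    ("human_input", "safety_check", always),
    ("safety_check", "unsafe_response", toy_when(safe=False)),
    ("safety_check", "ai_response", toy_when(safe=True)),
    ("unsafe_response", "human_input", always),
    ("ai_response", "human_input", always),
]


def next_action(current: str, state: StateDict) -> Optional[str]:
    """Return the target of the first matching rule out of `current`, in declaration order."""
    for src, dst, condition in TRANSITIONS:
        if src == current and condition(state):
            return dst
    return None  # no transition applies


def is_safe(prompt: str) -> bool:
    # Same toy heuristic as the safety_check action.
    return "unsafe" not in prompt
```

A router for a mixture of experts follows the same pattern: one rule per expert, each guarded by a condition on the field the router action writes.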

Hopefully this has shown you that building a state machine can make logic easier to handle and represent – let’s talk about the additional benefits it gives you that help you take your app to production.

Where Burr can take you

Running in a GUI

Because Burr is dependency-free Python, it can run inside any server, web app, Streamlit app, FastUI app, etc. Running it is just a function call. See the chatbot example in the demo app for more.

Tracking

Burr comes out of the box with a tracking UI – this allows you to run any Burr application and watch it in real time. It requires a small change to the code.

app = (
    ...
    .with_tracker(
        "local",
        project="burr-blog",
    )
    .build()
)

When we run it, we can see it pop up in the UI and watch it progress through the steps. We can go back in time, examine the state at any given point, and track the results.

Persistence

If we want to save it to a database, we can hook into one of the provided state persisters. To keep it simple, let’s persist to SQLite using a wrapper around the standard library’s sqlite3 module:

from burr.core.persistence import SQLLitePersister

app = (
    ...
    .with_state_persister(
        SQLLitePersister(db_path="my_database")
    )
    .build()
)

Then, let’s say we want to run it in production and switch to PostgreSQL:

from burr.core.persistence import PostgreSQLPersister

app = (
    ...
    .with_state_persister(
        # PG_DB, PG_USER, etc. are placeholders for your connection settings
        PostgreSQLPersister.from_values(
            PG_DB,
            PG_USER,
            PG_PASSWORD,
            PG_HOST,
            PG_PORT
        )
    )
    .build()
)

The persister and ApplicationBuilder APIs can also initialize from a saved state and rewind; read more in the documentation.

The persistence API is extensible, meaning you can add your own persisters that customize:

The database you save to

The schema you use

This allows you to integrate with your app easily and transform the state into your preferred schemas to store and reload it. State persistence is naturally keyed by an application ID and a partition key, which allows you to query application data in an ergonomic manner (although the indices are, of course, customizable).
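As an illustration of what a custom persister might look like, here is a minimal sketch built directly on the standard library’s sqlite3 module. The class name, table name, and schema are our own assumptions: it is not Burr’s SQLLitePersister and does not implement Burr’s persister interface. It simply stores JSON snapshots keyed by (partition_key, app_id, sequence_id), so you can load the latest state or rewind to an earlier one.

```python
import json
import sqlite3
from typing import Any, Dict, List, Optional


class ToySQLitePersister:
    """Hypothetical persister: one JSON state snapshot per (partition, app, step)."""

    def __init__(self, db_path: str = ":memory:") -> None:
        self.conn = sqlite3.connect(db_path)
        self.conn.execute(
            """CREATE TABLE IF NOT EXISTS app_state (
                   partition_key TEXT NOT NULL,
                   app_id TEXT NOT NULL,
                   sequence_id INTEGER NOT NULL,
                   position TEXT NOT NULL,
                   state_json TEXT NOT NULL,
                   PRIMARY KEY (partition_key, app_id, sequence_id)
               )"""
        )

    def save(self, partition_key: str, app_id: str, sequence_id: int,
             position: str, state: Dict[str, Any]) -> None:
        with self.conn:  # commits on success, rolls back on error
            self.conn.execute(
                "INSERT OR REPLACE INTO app_state VALUES (?, ?, ?, ?, ?)",
                (partition_key, app_id, sequence_id, position, json.dumps(state)),
            )

    def load(self, partition_key: str, app_id: str,
             sequence_id: Optional[int] = None) -> Optional[Dict[str, Any]]:
        """Load the latest snapshot, or a specific sequence_id (e.g. to rewind)."""
        query = ("SELECT sequence_id, position, state_json FROM app_state "
                 "WHERE partition_key = ? AND app_id = ?")
        params: List[Any] = [partition_key, app_id]
        if sequence_id is not None:
            query += " AND sequence_id = ?"
            params.append(sequence_id)
        row = self.conn.execute(query + " ORDER BY sequence_id DESC LIMIT 1", params).fetchone()
        if row is None:
            return None
        return {"sequence_id": row[0], "position": row[1], "state": json.loads(row[2])}
```

Keying by partition first (for example, a user ID) makes “all conversations for this user” a cheap prefix query on the primary key.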

Other features/coming soon

Burr comes with a variety of capabilities, and we’re building more by the day!

Asynchronous APIs — run more efficiently and ergonomically in a web service

Tracing – view sub-step timing and traces

Metadata/Annotation – attach data to actions/traces inside actions – query later for evaluation.

Integrations with popular frameworks

Streaming integration – fully supported streaming outputs

[Coming Soon] TypeScript support – we intend to maintain a fully compatible, up-to-date TypeScript version

Looping back

Let’s revisit the complexities we brought up before:

Track/store all user sessions (conversations) ⇔ persist to state

Use the result of internal models to determine which models to query ⇔ state/edge transitions

Delegate, wait for response, stream it back, and store it in session history ⇔ streaming actions with state update

Track context and pass it in ⇔ state/edge transitions + state update to chat history/others

Annotate data for evaluation and fine-tuning ⇔ metadata + annotations

Monitor for anomalies, failure cases, etc… ⇔ telemetry + tracking

Getting started + next steps

To get started with Burr, first install it from PyPI:

pip install "burr[start]"

Then run the tracking server, which will open a browser window with a chatbot demo (note that you need OPENAI_API_KEY in your environment to add new chats, but you can still explore the chat history and interact with telemetry without it).

burr-demo

Then read our getting-started guide and build your own applications!

Endless possibilities

While the example here is geared towards interactive conversations, we expect Burr to be useful for far more than AI applications, including:

Simulations – manage time-series forecasts and state transitions through actions

Agents – actions wrap tools, conditions select what to do

ML training routines – train an epoch, evaluate metrics, and decide how to proceed

And more!

We’re really excited about where our library is going. What’s up next? Quite a bit:

Testing/eval curation – making the process of extracting data for application eval easier

More integrations with popular frameworks so you can chain together actions easily

Integration with serving frameworks – easily deploy your application as a FastAPI app.

Hosted cloud execution, telemetry, and storage.

We’re building in public, and want the community to participate. So:

Open up an issue or a discussion if you want help

Look through the issues, submit a PR, or reach out (founders at dagworks dot io) if you want to contribute

Please give us a star if you like what we’re building

Reach out if you want help getting started/applying Burr to your use-case

Check out Hamilton if you need a simple way to implement actions, or if your application carries no state (e.g., simple flows or chains)

Thank you so much for reading. We hope you have as much fun building with Burr as we have had developing and playing with it!

FAQ

Q: What is Burr?

A: Burr is a library created by DAGWorks to help manage state when building AI applications. It allows you to model your application logic as a state machine, with transitions between different actions/states based on conditions.

Q: Why use a state machine approach for AI applications?

A: Managing state is very important but challenging for AI applications, as the app state determines the inputs to models, which can greatly impact outputs. Modeling the application explicitly as a state machine makes it easier to reason about, test, and debug, and to persist state across sessions.

Q: What are some key features/benefits of using Burr?

A: Highlights include the ability to unit test actions, rewind state for debugging, persist/reload state easily, visualize the state machine, hook into telemetry/tracking, and integrate with popular frameworks (integration with serving frameworks is on the roadmap).

Q: How do I get started with Burr?

A: Install Burr from PyPI, run the demo tracking server, then follow the getting started guide to build your first Burr application modeling your logic as actions and state transitions.

Q: What types of applications is Burr useful for?

A: While the examples focus on interactive conversational AI, Burr can be useful for any application that needs to manage and persist state, like simulations, agents/robotics, ML training pipelines and more.

Q: Can Burr handle streaming responses?

A: Yes. You annotate the function with a slightly different decorator, something like the following:

from typing import Generator, Tuple

import openai
from burr.core import State
from burr.core.action import streaming_action


@streaming_action(reads=["prompt"], writes=["response"])
def streaming_chat_call(state: State, **run_kwargs) -> Generator[dict, None, Tuple[dict, State]]:
    client = openai.Client()
    response = client.chat.completions.create(
        ...,
        stream=True,
    )
    buffer = []
    for chunk in response:
        # a chunk's delta content can be None (e.g. the final chunk)
        delta = chunk.choices[0].delta.content or ""
        buffer.append(delta)
        yield {'response': delta}
    full_response = ''.join(buffer)
    # response is a single string, so update (not append) it in state
    return ({'response': full_response},
            state.update(response=full_response))

Then you would call `application.stream_result()` as part of your control flow, which gives you back a container object that you can stream to the user:

action_we_just_ran, streaming_result_container = application.stream_result(...)
print(f"getting streaming results for action={action_we_just_ran.name}")
for result_component in streaming_result_container:
    print(result_component['response'])  # this assumes you have a
                                         # response key in your result
# get the final result
final_result, final_state = streaming_result_container.get()

This is handy in a web server or a Streamlit app, where you can use streaming responses to connect to the frontend. Here's how we use it in a Streamlit app.
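If the generator-with-a-return-value shape of streaming_chat_call is unfamiliar, here is a self-contained, offline sketch of the mechanics. `fake_streaming_chat` and `ToyStreamingContainer` are hypothetical stand-ins, not Burr’s classes: the generator yields partial results, its return value carries the final (result, state), and the container recovers that value from StopIteration.value.

```python
from typing import Any, Dict, Generator, Iterator, List, Optional, Tuple

StateDict = Dict[str, Any]
Final = Tuple[Dict[str, str], StateDict]
StreamGen = Generator[Dict[str, str], None, Final]


def fake_streaming_chat(state: StateDict, chunks: List[Optional[str]]) -> StreamGen:
    """Yield each chunk as it 'arrives', then return the final (result, new_state)."""
    buffer = []
    for delta in chunks:
        delta = delta or ""  # streamed chunks may carry no content (None)
        buffer.append(delta)
        yield {"response": delta}
    full_response = "".join(buffer)
    return {"response": full_response}, {**state, "response": full_response}


class ToyStreamingContainer:
    """Iterate to receive partial results; call get() for the final (result, state)."""

    def __init__(self, gen: StreamGen) -> None:
        self._gen = gen
        self._final: Optional[Final] = None
        self._done = False

    def __iter__(self) -> Iterator[Dict[str, str]]:
        while not self._done:
            try:
                chunk = next(self._gen)
            except StopIteration as stop:
                # A generator's `return` value arrives as StopIteration.value.
                self._final = stop.value
                self._done = True
                return
            yield chunk

    def get(self) -> Final:
        for _ in self:  # drain any chunks the caller has not consumed yet
            pass
        assert self._final is not None
        return self._final


container = ToyStreamingContainer(
    fake_streaming_chat({"prompt": "Who was Aaron Burr?"}, ["Aaron ", "Burr ", None, "was..."])
)
streamed = [part["response"] for part in container]
final_result, final_state = container.get()
```

Separating the stream of partial results from the final (result, state) pair lets the frontend render tokens as they arrive while the state update still happens exactly once, at the end.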

Q: How can I contribute to or provide feedback on Burr?

A: Open an issue or discussion on the Burr GitHub repo. You can also submit PRs, or reach out to the founders@dagworks.io email. Starring the repo also helps!

[1] Note that these requirements assume much of what can (and likely does) occur inside the model takes place at the application layer. In general, there’s an isomorphism between what’s inside the black box and what’s outside it. This mental model should be straightforward to adjust for any configuration, but it illustrates the point nicely.

[2] Call us old-fashioned Java developers: we’ve set aside opaque, fancy syntax (pipes, shift operators, and the like) in favor of the good old builder pattern.