Building a conversational GraphDB RAG agent with Hamilton, Burr, and FalkorDB

A quick example showing how to use FalkorDB, a GraphDB, with Hamilton and Burr to facilitate a conversational RAG agent answering questions over UFC fight data.

Introduction

In the rapidly evolving landscape of data-driven applications, the integration of the right tools can unlock new potentials for interactive and intelligent systems. In this blog post, we explore how to build a conversational Retrieval-Augmented Generation (RAG) agent by leveraging Hamilton and Burr, production ready tools for lightweight data transformation and agent orchestration, along with FalkorDB, a cutting-edge Graph Database. By using some UFC fight data, we will demonstrate how these tools can be seamlessly combined to create an agent capable of answering complex queries. We will use Hamilton to define a modular pipeline for transforming and ingesting data into FalkorDB, and then Burr to manage the conversational piece that will talk to an LLM as well as call out to FalkorDB.

This post is for those looking for a Python example of conversational RAG using a GraphDB that could be easily taken to production. We’ll first walkthrough the tools and then dive into how they all work together.

Assumption: we assume the reader is already familiar with basic RAG concepts. If not we direct the reader to prior posts:

What is a GraphDB & FalkorDB?

A Graph Database (Graph DB) is a type of database designed to represent and store data in the form of a graph. Specifically graph here refers to the computer science term. For those unfamiliar with this specific concept, let's imagine you have a group of friends, and each friend knows other people. In a graph database, each friend is represented as a "node” (i.e. a round circle), and the relationships between them (like "knows" or "is friends with") are represented as "edges" connecting these nodes.

So, in a graph database:

Nodes are like individual people or entities.

Edges are the connections or relationships between those people or entities.

Properties are details about nodes and edges, like a person's name, age, or how long two people have been friends.

This structure makes it easy to map and explore complex relationships and patterns in data, much like how you can quickly see who is connected to whom in your social network. It's particularly useful for applications where relationships between data points are as important as the data itself, such as recommendation systems, fraud detection, and network analysis; and as we’ll see here UFC fight data.

What is FalkorDB?

FalkorDB is an implementation of a GraphDB. FalkorDB takes a unique approach to in-memory graph representation and traversal by leveraging sparse matrices to represent the underlying graph topology and evaluating linear algebra expressions to answer queries. This approach results in both efficient information processing and accelerated information retrieval. These capabilities form the foundation of GraphDB RAG (or GraphRAG for short), a solution that addresses the limitations of traditional Vector RAG for Large Language Models (LLMs). Ultimately, FalkorDB translates this efficiency into ultra-low latency knowledge access for LLMs, providing a smooth user experience.

How do you query/talk to a GraphDB?

To interact with FalkorDB you use the Cypher query language. It is a language designed specifically for interacting with graph databases. You can think of it as a language similar to SQL (which is used for traditional relational databases), but tailored for working with the nodes and relationships in a graph database. Specifically, it allows you to specify what you're looking for in the graph and how to navigate through the nodes (entities) and relationships (connections) to find it.

How to Think About the Cypher Query Language:

Nodes and Relationships: Using our example from above, imagine you have a network of friends. Each friend is a node, and each friendship is a relationship. Cypher helps you ask questions like, "Who are the friends of my friends?" or "Which friends live in the same city?"

Pattern Matching: Just as you might draw a diagram to show connections between people, Cypher uses simple visual patterns to represent these relationships. For example, you might use:

()to represent nodes.-->to represent directed relationships (like "follows" on social media).--for undirected relationships (like "friends with").

Query Structure: A Cypher query typically includes:

MATCH: To specify the pattern of nodes and relationships you're looking for.

WHERE: To filter the results based on certain criteria.

RETURN: To specify what data you want to retrieve.

CREATE: Incase you want to “insert” data.

Cypher Example - Loading Data

To load data into a GraphDB we need to first create nodes, and then specify the relationships between them, in that order. Continuing our example of people, here’s how we’d create a small network of friends:

MATCH: Finds patterns that match nodes.

CREATE: inserts the node and/or relationship.

MERGE: not shown here, is combination of MATCH & CREATE to ensure uniqueness and avoid duplicating entries in the GraphDB.

Cypher Example - Querying:

What if we want to find all the friends of a person named "Alice"? Here's how you might write this query in Cypher:

MATCH: Finds patterns where a person named Alice has a friendship relationship (FRIEND_OF) with another person.

RETURN: Retrieves and displays the names of Alice's friends.

Let’s unpack the query a little more:

Nodes and Relationships: In this query

(alice:Person {name: 'Alice'})represents the node for Alice, who is a person.[:FRIEND_OF]represents the friendship relationship.(friend:Person)represents the nodes for Alice's friends, who are also persons.

Pattern Matching: This query matches the pattern of Alice being connected to her friends through a FRIEND_OF relationship.

Filtering and Returning Data:

We specify that we are looking for a person named Alice.

We retrieve and return the names of the people who are friends with Alice.

What is Burr?

Burr is a lightweight Python library you use to build applications as state machines. You construct your application out of a series of actions (these can be either decorated functions or objects), which declare inputs from state, as well as inputs from the user. These specify custom logic (delegating to any framework), as well as instructions on how to update state. State is immutable, which allows you to inspect it at any given point. Burr handles orchestration, monitoring, persistence, etc…).

You run your Burr actions as part of an application – this allows you to string them together with a series of (optionally) conditional transitions from action to action.

Burr comes with a user-interface that enables monitoring/telemetry, as well as hooks to persist state/execute arbitrary code during execution.

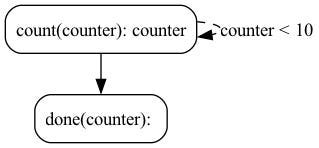

You can visualize this as a flow chart, i.e. graph / state machine:

And monitor it using the local telemetry debugger:

While the above example is a simple illustration, Burr is commonly used for RAG applications (like in this example), chatbots, and human-in-the-loop AI interfaces. See the repository examples for a (more exhaustive) set of use-cases.

What is Hamilton?

Hamilton, developed by DAGWorks Inc., is an open-source framework designed to help data scientists and engineers create, manage, and optimize dataflows using Python functions. At its core, Hamilton transforms these functions into a Directed Acyclic Graph (DAG) that captures the dependencies between transformations. This approach facilitates the creation of modular, testable, and self-documenting data pipelines that encode lineage and metadata, making it easier to manage and understand data processes.

Hamilton runs anywhere that Python runs, and runs inside systems like Jupyter, Airflow, FastAPI, etc.

Key features of Hamilton include:

Modular Dataflows: Each function in Hamilton represents a specific data transformation, making it easier to build and maintain complex workflows.

Automatic DAG Creation: Functions are automatically connected into a DAG based on their dependencies, allowing for efficient execution and optimization.

Visualization and Monitoring: Hamilton provides optional tools to visualize and monitor dataflows, enhancing transparency and debugging capabilities.

Integration with Existing Tools: It can be used alongside other data processing tools and libraries such as Pandas, Dask, and Ray, allowing for seamless integration into existing workflows.

Hamilton is particularly useful for building scalable data processing pipelines, that are unit testable & documentation friendly, which ensures that the codebase remains organized and maintainable as projects grow in complexity.

How does Hamilton work?

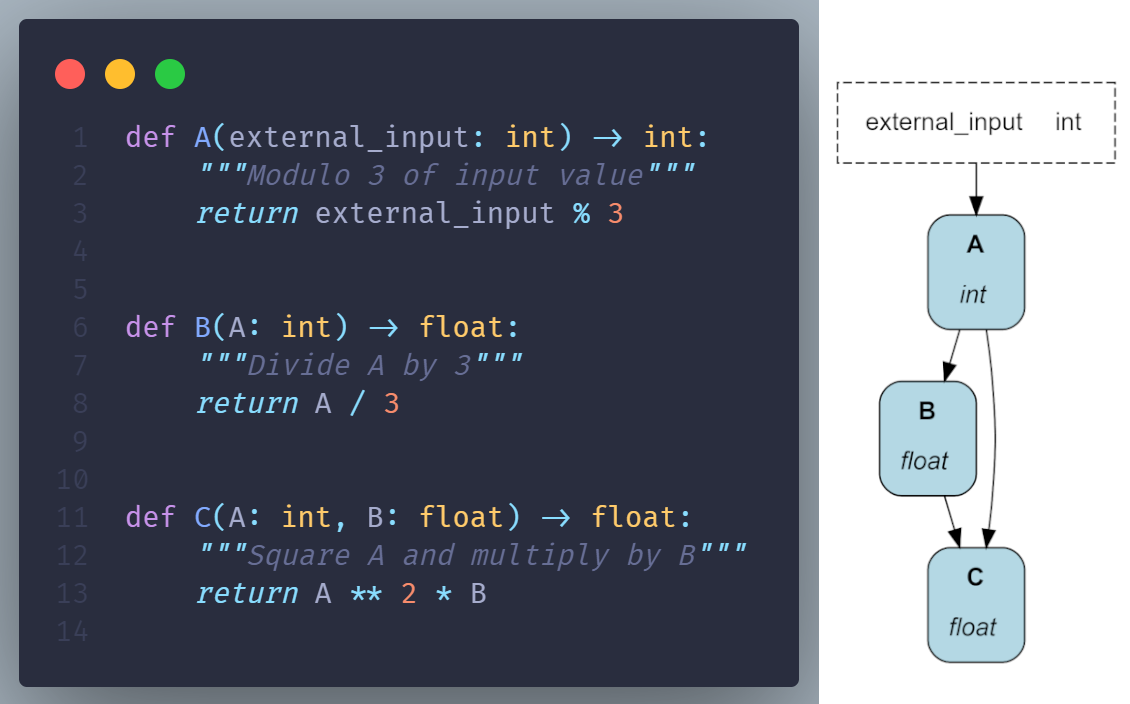

Hamilton uses a declarative approach to expressing a DAG. Developers write regular Python functions to declare what can be computed using the function name, in Hamilton they’re called “nodes”, while each Python function also declares dependencies using the function parameter name and type.

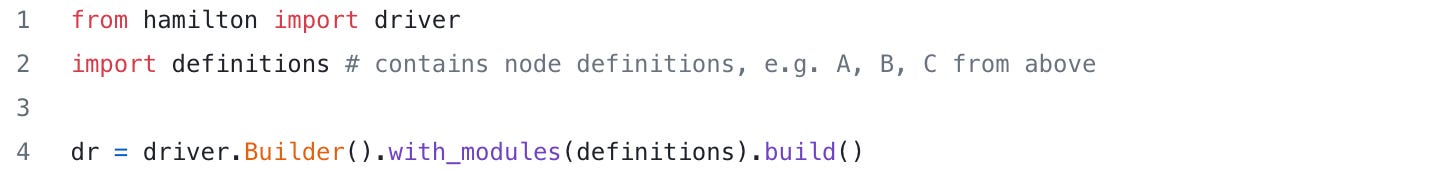

Contrary to imperative approaches, Hamilton is responsible for loading definitions and automatically assembling the DAG. This is done through the Driver object:

To execute code, users request nodes and Hamilton determines the recipe to compute them on the fly.

Compute all nodes and only return value for “C”:

Compute only nodes “A” & “B” and return value for “B”:

Compute all nodes and return their values:

RAG Example

You can follow along with all the code here. Note, simplified versions of this example also appear in the Hamilton & Burr repositories. Also gists of these code snippets can be found here.

First let’s get some data into FalkorDB, then let’s talk about inference. Also note, for those new to Hamilton and/or Burr, we also include versions of ingestion (ingest.py) and inference (QA.py) that don’t use them, so you can more clearly see what they bring to the table: testable, easy to iterate on, and maintainable code.

Ingestion

Files: hamilton_ingest.py, ingest_fighters.py, ingest_fights.py, ingest_notebook.ipynb.

GraphDBs are generally schema-less, meaning you can push a lot of data in as a “python dictionary”, but otherwise you have to curate this structure to ensure everything connects. From the fight data created we need to first push information about our fighters, before linking them with fight data.

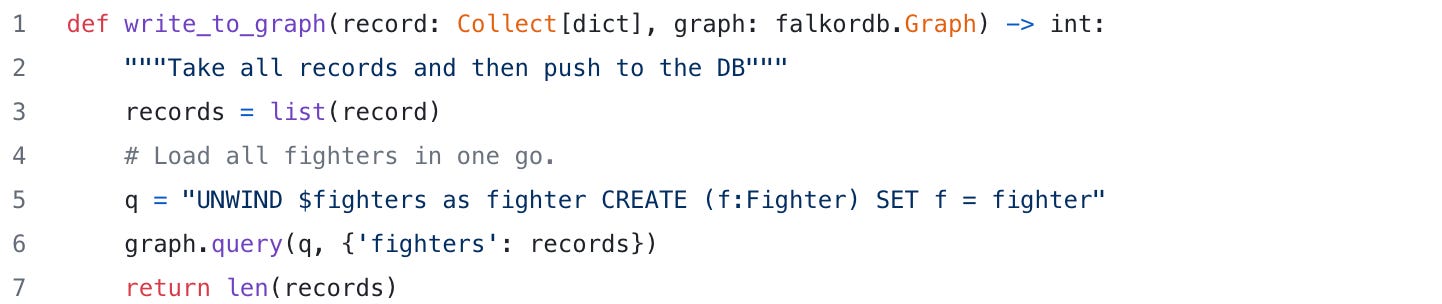

Load fighters: we pull attributes from the CSV (from the provided UFC fight data), and then create a Python dictionary of them for each fighter. Skipping showing the code that creates this dictionary, the salient code to push to FalkorDB is the following:

Each “record” here is things like: data of birth, takedown average, takedown accuracy, stance, reach, etc.

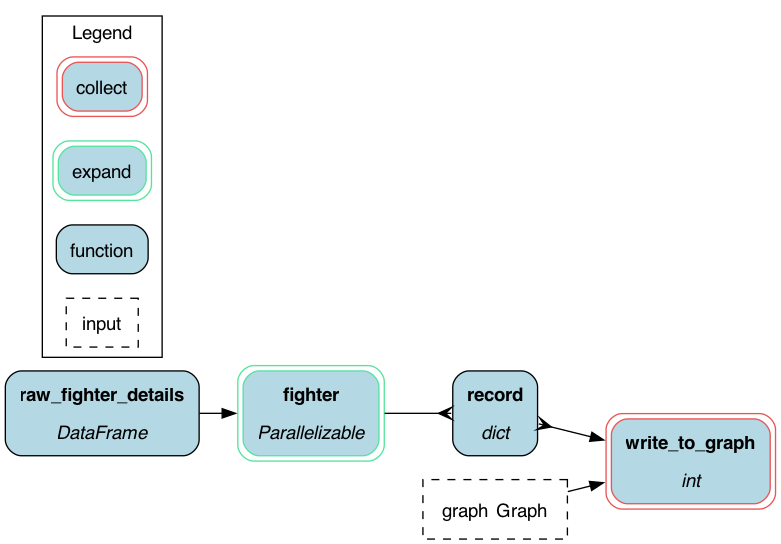

This then populates FalkorDB with information on each fighter. Here’s the Hamilton DAG representing this simple DAG.

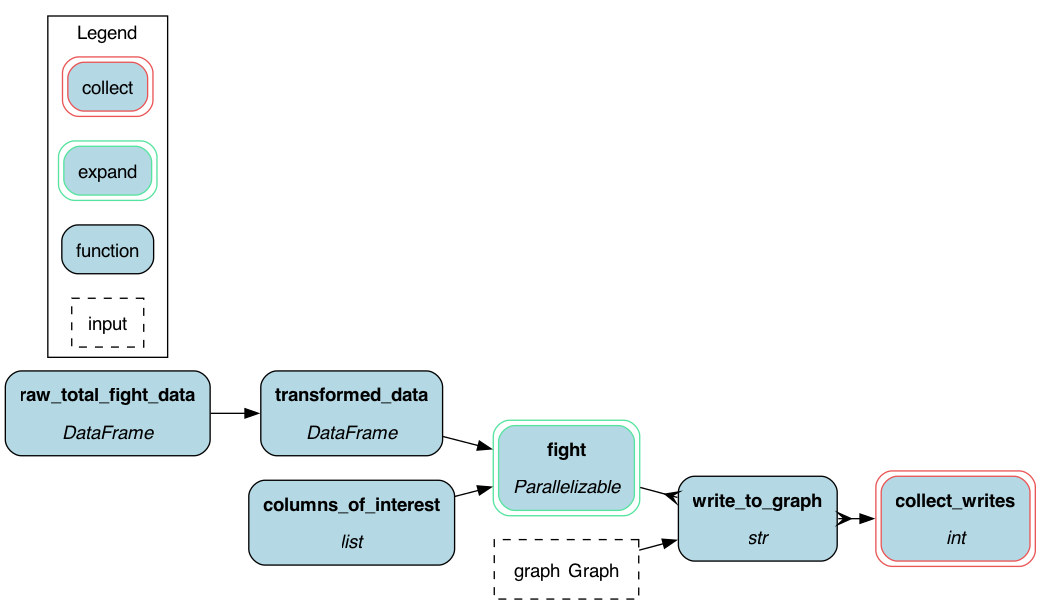

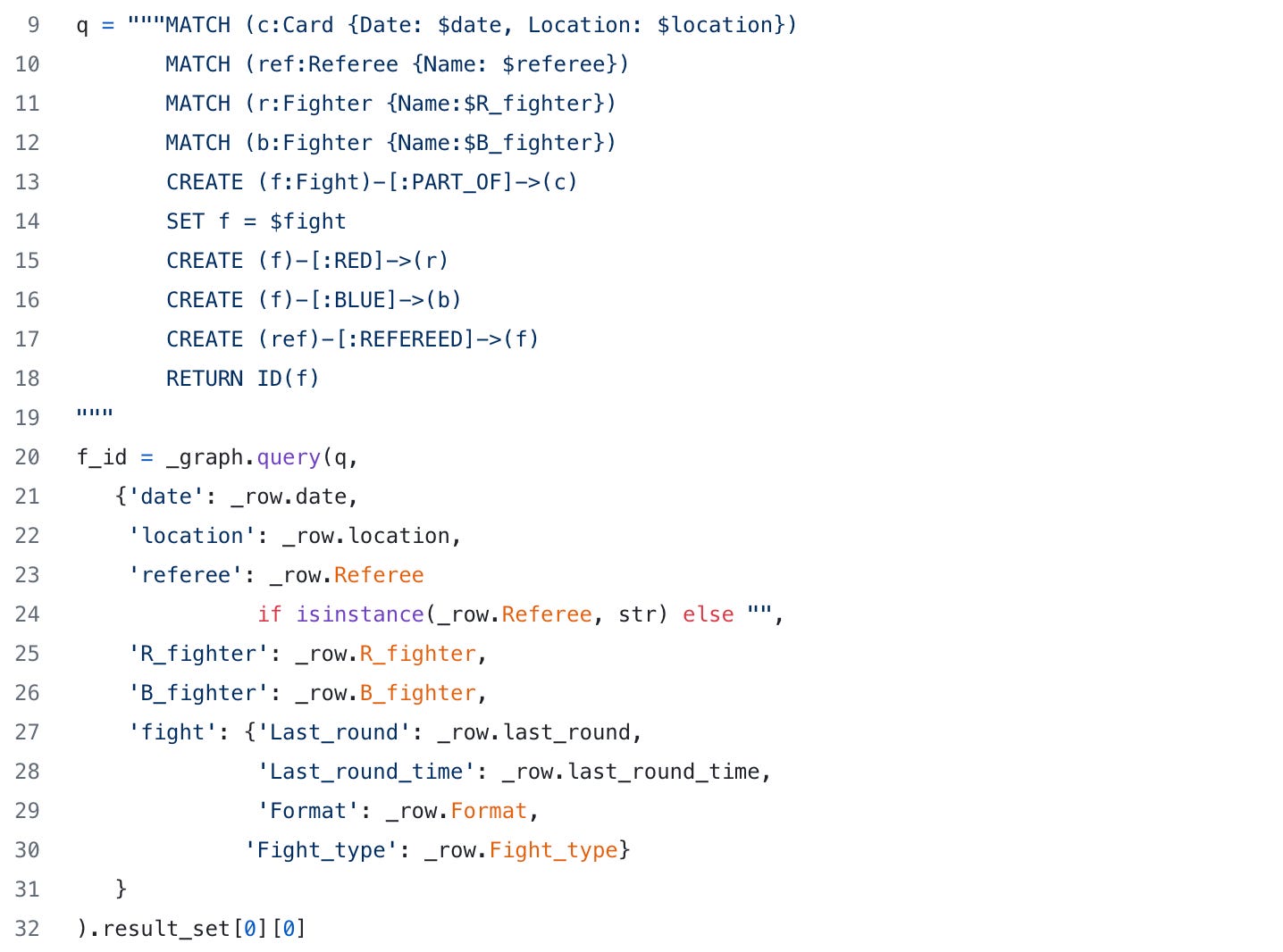

Load fights: once fighters are loaded, we can create our fights. The ingestion looks like the following:

The things that we want to store about fights are things like: "R_fighter", "B_fighter", "last_round", "last_round_time", "Format", "Referee", "date", "location", "Fight_type", "Winner", "Loser". The output of `fight` (the green box above) will contain the aforementioned bits of information, which we will then use to populate FalkorDB with:

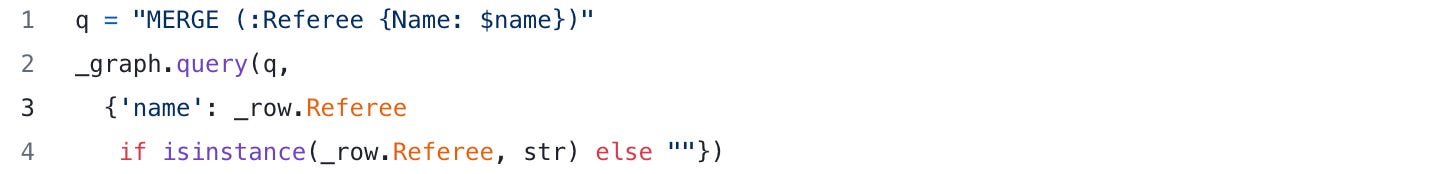

we need to create a referee.

Gist here we need to create a fight card for the fight.

Gist here we then create the fight, by first matching on card, referee, and fighters, and then linking them together with the fight.

Gist here lastly we mark who won or lost:

Gist here

Now we have everything that we need in FalkorDB! Note, FalkorDB has a UI that you can query and visualize some of the relationships stored within it.

Inference

Files: burr_QA.py, or qa_notebook.ipynb.

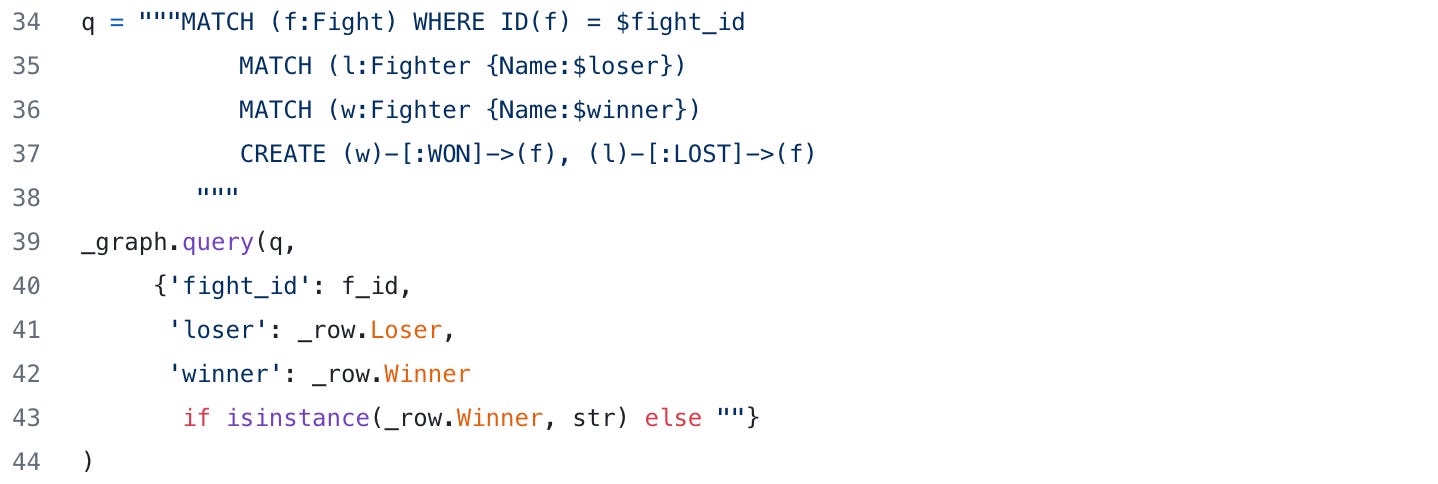

FalkorDB is now ready to be used for RAG. The outline of our conversational agent will look like the following:

Given user input, we need to decide whether we can create a cypher query or not -AI_create_cypher_query.

If so, use the cypher query and query FalkorDB, i.e. via a “tool call” - tool_call.

We then use the results to create a response - AI_generate_response.

We then loop back around - human_converse —> AI_create_cypher_query.

This can be represented as the following “state machine” in Burr:

We’ll now dive deeper into each of these “actions”, and then walkthrough how the Burr application is then constructed.

Creating the Cypher Query

There’s a few things we need to do, to enable creating a cypher query.

Provide a system prompt that tells the LLM what it should do.

Tell the LLM to output the query appropriately. We’re using OpenAI in this example so we’ll use it’s function calling abilities to get structured output back to know when to query FalkorDB or not.

System prompt

As part of our system prompt we provide a schema that tells the LLM what is available in FalkorDB. This is achieved via the `schema_to_prompt()` function. Otherwise our system prompt, uses a one shot learning approach, and looks like the following:

Telling the LLM how to call FalkorDB

For the LLM to know how to call FalkorDB, we also need to provide that context. We help do this by creating two things:

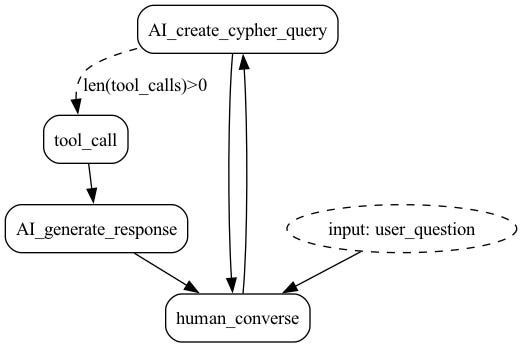

A function for the actual call to FalkorDB — we make sure to include error responses so that the LLM can self correct its query if it gets it wrong:

Gist here A description of what’s required to call it. Note, the signature need not match, what’s important is the argument and how you describe it to the LLM so that it knows what it should create.

Gist here

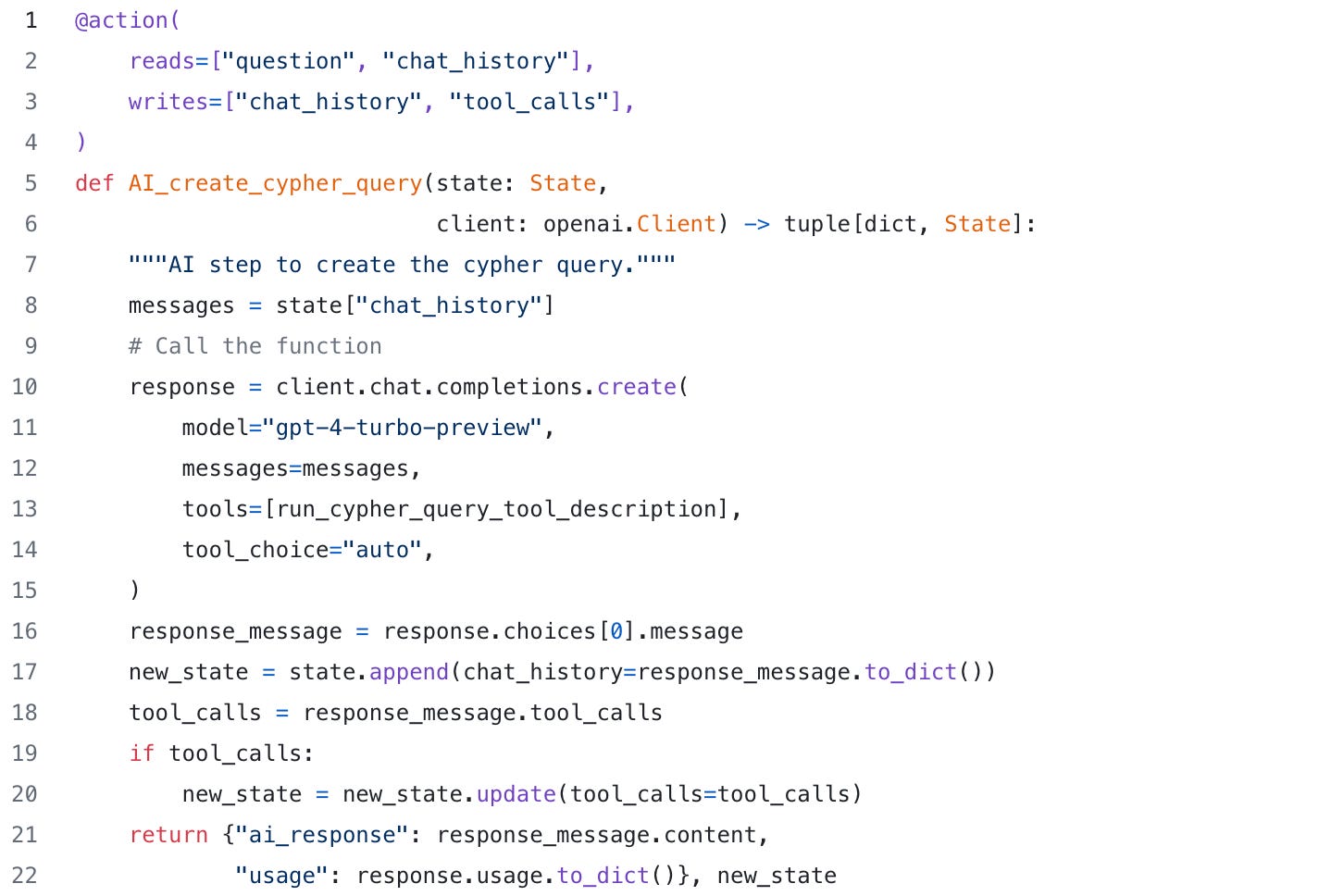

With those two, we can then complete our action to create a query:

Calling FalkorDB

It is a relatively straightforward exercise to execute the query against FalkorDB. We just use the prior functions, and pass in the query that the LLM created. It is possible that the LLM might want to combine the output of multiple queries, so that’s why there is a loop.

Creating a response

Creating the user response is again a straighforward LLM call. Given the prior output, we pass that to the LLM to create a response.

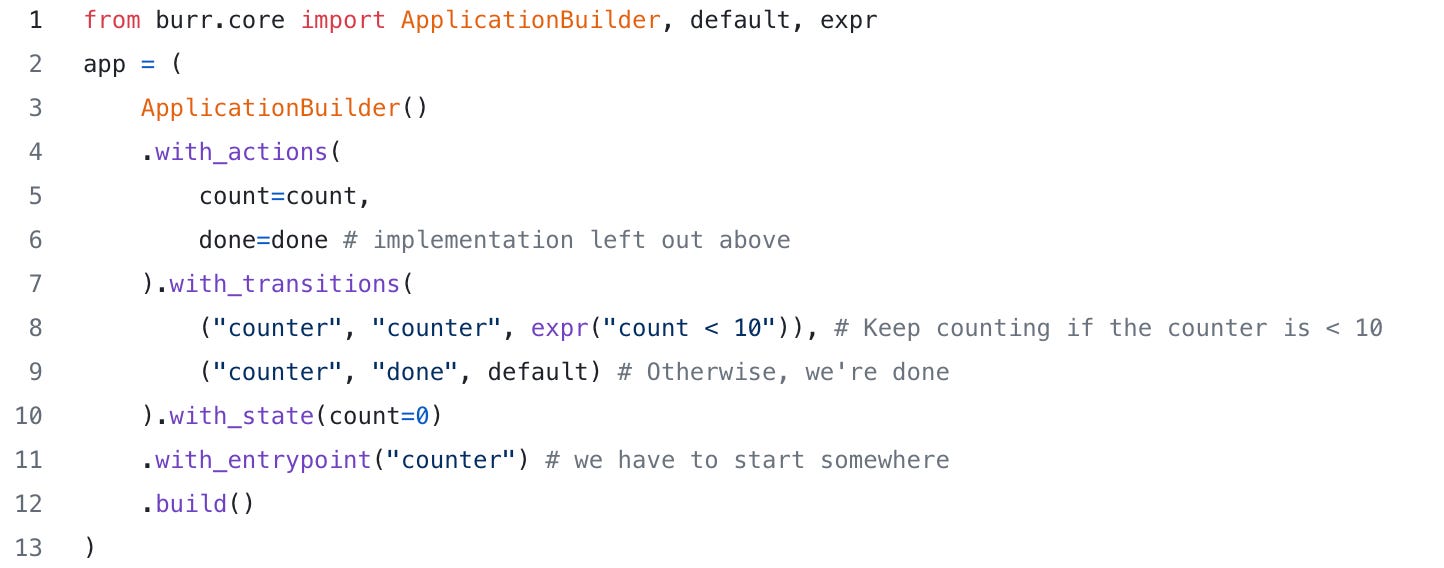

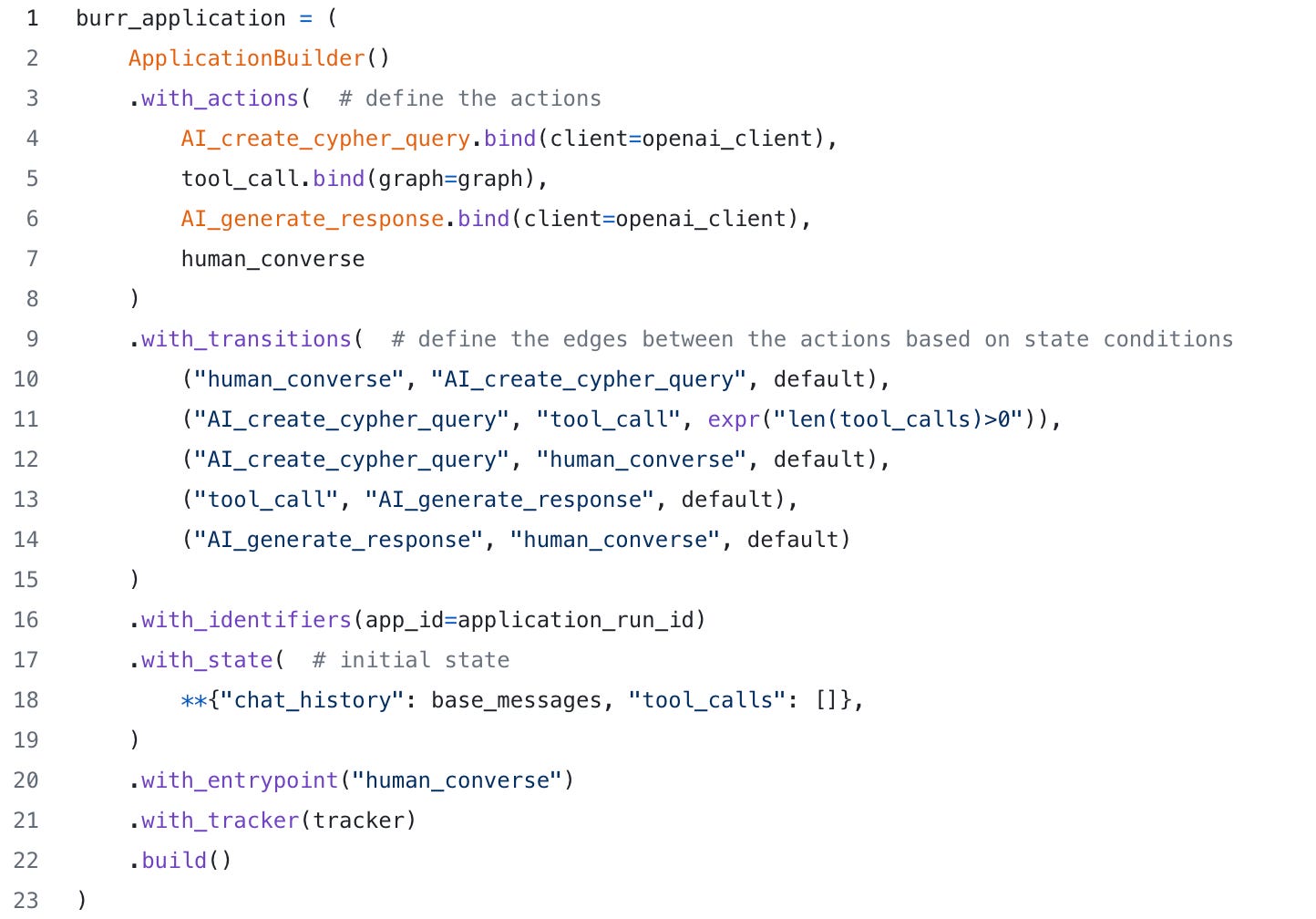

Creating the Burr Application / State Machine

Wiring together the actions above into our state machine would look like the following:

Salient points in the code to understand above:

We only transition to calling FalkorDB if we have cypher queries to call. That’s expressed via the

expr("len(tool_calls)>0")condition. Note, order of transitions matter in Burr, so conditional transitions need to come before default ones.We set the initial state, that’s how the system message is always in the chat history at the beginning.

We set a local tracker that will log traces for us to be able to view/debug (see below for more details).

Running our agent

To run our agent, then it’s just a matter of looping until we want to quit:

Congratulations, you now have a fully functioning RAG agent that uses a GraphDB.

Let’s ask some questions

Here’s some sample questions to ask:

Which fighter holds the fastest win?

AI: The fighter who holds the fastest win is Jorge Masvidal, achieving a victory in the first round within just 5 seconds.Who has a 50% win rate?

AI: The list of fighters who have a 50% win percentage includes names like Joe Slick, Ken Stone, Kevin Jackson, Lorenz Larkin, and many others. This list comprises fighters who have an equal number of wins and losses within the records captured in the knowledge graph.Who has the most wins without any losses?

AI: The fighter with the most wins and 0 losses is Khabib Nurmagomedov, with a total of 13 wins.There’s plenty more that you can ask! Note for more complex questions, you might need to prompt the LLM to think through what’s required to help it formulate the Cypher query.

For example, you might encounter the following if you just ask the question this way:

Which fighter has the best winning percentage ranked by number of fights?

AI: It appears there was a problem executing the query to find the fighter with the best winning percentage ranked by the number of fights. Unfortunately, without the successful execution of the query, I cannot provide the requested information based on the data from the knowledge graph.

To analyze fighter statistics accurately, including wins, losses, and total number of fights to calculate the winning percentage, a correct and executable query is essential. The query needs to properly aggregate and calculate counts of wins and losses for each fighter and then derive the winning percentage from these figures. Adjustments to the query structure might be necessary to address the encountered error and to achieve the desired output effectively.

Please let me know if you would like me to attempt a different approach or if there's anything else specific I can assist you with.

Instead you might need to instruct it a bit:

Which fighter has the best winning percentage ranked by number of fights? Please think through the steps needed to calculate this, and then create the cypher query and execute it.

AI: The query returned an extensive list of fighters with varying win-loss records. Here are a few examples extracted from the list, highlighting fighters with the best winning percentages:

1. **Fighters with 100% Winning Percentage (Sorted by the number of fights won)**

- Khabib Nurmagomedov: 13 wins out of 13 fights

- Kamaru Usman: 13 wins out of 13 fights

- Alexander Volkanovski: 9 wins out of 9 fights

- (and many others with fewer fights but 100% win rates)

2. **Fighters with High Winning Percentages (But not 100%)**

- Jon Jones: 20 wins out of 21 fights (95.238%)

- Amanda Nunes: 14 wins out of 15 fights (93.333%)

- Georges St-Pierre: 20 wins out of 22 fights (90.909%)

The list also includes fighters with varying numbers of fights and win rates, including those with a 0% winning percentage.

For a complete list or any specific queries about fighter records, please let me know!Inspecting / debugging what happened

It’s important that you think about how to debug these types of applications and write code in a way that makes this easy. Thankfully with Burr, you don’t have to do much. Why? Well there isn’t much of a need to see to log what’s going on, because the framework can take care of that for you. To see what I mean, after you’ve run your Burr application, you can start up the open source UI by typing burr on the command line and navigating to the UI on http://localhost:7241. You’ll then see the trace of your last conversation.

This is useful to help debug or understand cases where the LLM struggled to produce the right query; allowing you to replay/retry from that exact same spot in easily. For example, if you’re trying to do more complex asks, it’ll expose the intermediate state, e.g. the query, so you can see what it tried to run, etc. You can then pair this with the FalkorDB UI to verify/validate the queries.

For a quick overview of how to use the Burr UI to debug we invite you to read this post:

Comparison to a Vector DB

There’s a lot of buzz about Vector DBs being able to solve everything. However here’s a clear and hyperbolic example where that’s not the case. Yes, this should be an obvious example where you shouldn’t use a Vector DB (that data is structured & not text), but you’d be surprised how often people think all the need is a Vector DB for RAG.

Thus, in the repository we also include an implementation that also uses a Vector DB, instead of FalkorDB. If you go along with the instructions on how to populate the Vector DB, you’ll notice that inference isn’t anywhere near as good. E.g. just ask the same questions and you’ll see you’ll get very different responses.

Why? Vector DBs operate based on semantic similarity via a distance measure over vectors. Other than perhaps asking which fighters seem the most similar, the ability to answer and query over attributes is greatly reduced to non-existent, which then limits what an LLM can do with the context it returns. TL;DR: for knowledge based data, a GraphDB is a great choice to power a conversational RAG bot/agent.

Summary

By integrating Hamilton, Burr, and FalkorDB, we've demonstrated how to create a modular conversational RAG agent capable of navigating and querying complex UFC fight data. This approach leverages the strengths of each tool: Hamilton's production ready way to define data transformations, Burr's ability to execute and easily introspect and understand agent/application orchestration, and FalkorDB to house the actual data. Together, they form a cohesive and modular system that can be taken to production. Whether you're exploring new ways to utilize your data or building sophisticated AI agents, this example provides a solid foundation to expand upon.