Test Driven Development (TDD) of LLM / Agent Applications with pytest

An introduction to using pytest with Burr to build reliable AI software.

Shipping on more than vibes

To build reliable AI software, i.e. wrangle the non-determinism inherent with LLMs, one must graduate from shipping on “vibes” to instead invest in building systematic evaluations. Much like unit test suites ensure standard software is reliable, LLM-powered software also need a suite of tests to directionally assess or evaluate reliability. The hard part is that these tests aren’t as simple as those for regular software.

In this post we show one possible approach to building an LLM application test suite and using it in a test driven manner - with pytest! If you aren’t using python, don’t use pytest, or don’t use Burr, this post will still be helpful at a high level — you can replicate the learnings with other testing libraries/systems. Overall, this post should leave you with:

A mental picture of how to iterate on LLM / agent applications.

What pytest is and how to use it.

What type of extension we need to make pytest work in the LLM / Agent use case.

Features of Burr that can help with pytest and creating more reliable AI software.

For the code that’s featured here you can also find it under this pytest example in the Burr repository.

Side note: if this topic interests you Hugo Bowne-Anderson and Stefan Krawczyk have a Maven Course: “Building LLM Applications for Data Scientists and Software Engineers” starting January 6th covering content like this to help you ship reliable AI applications.

This post is a long one, here’s an outline of this post’s structure to help you navigate it (or use the left hand side navigation bar of substack!), e.g. skip the introduction to pytest if you already know it:

Why pytest & Burr.

The high level SDLC with Burr & pytest.

pytest constructs & what needs to be adjusted to use pytest.

Burr’s functionality to help create pytest tests & a sketch of the TDD loop.

Some tips and then links to a full example.

Summary & FAQ.

Why pytest?

Pytest is a very popular python library for functionally testing python software. Rather than introduce a new tool, why not use what’s already available, and something you probably already know how to use? We think that reusing a well known tool will enable a more streamlined iteration loop (read on for more details).

Note: While pytest is very extensible due to its plugins framework, we’ll only be scratching the surface in this post. There are a few ways you could achieve the desired end result. If you come up with something better, please share it!

Why Burr?

When building LLM Applications / Agents, you get to choose how you build. Do you do everything yourself or do you use a framework? Burr allows you to take both paths. Rather than hide LLM calls (e.g. LangChain), or only require prompts to build agents (e.g. CrewAI), Burr aims to be really good at allowing you to “glue” whatever logic together you need in a standard manner, in a way where iteration is fast and observability is included. This means that you can choose to control all LLM API calls yourself, use other frameworks like Haystack, or do both, when building LLM applications / Agents with Burr. Burr’s strengths are that it solves the pain of (a) how to structure your code for fast iteration, (b) instrumenting for observability.

If you can draw a flowchart of your LLM application / Agent, then your code structure in Burr should mirror it.

What is Burr?

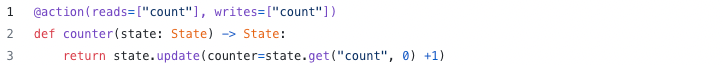

Burr is a lightweight Python library you use to build applications modeled similarly to state machines. You construct your application out of a series of actions (these can be either decorated functions or objects), which declare inputs from state, as well as inputs from the user. These specify custom logic (delegating to any framework), as well as instructions on how to update state. State is immutable, which allows you to inspect it at any given point. Burr handles orchestration, monitoring, persistence, etc…).

You run your Burr actions as part of an application – this allows you to string them together with a series of (optionally) conditional transitions from action to action.

Burr comes with a user-interface that enables monitoring/telemetry, as well as hooks to persist state/execute arbitrary code during execution.

You can visualize this as a flow chart, i.e. graph / state machine:

And monitor it using the local telemetry debugger:

While the above example is a simple illustration, Burr is commonly used for AI assistants (like in this example), RAG applications, and human-in-the-loop AI interfaces. See the repository examples for a (more exhaustive) set of use-cases.

A SDLC with Burr and pytest

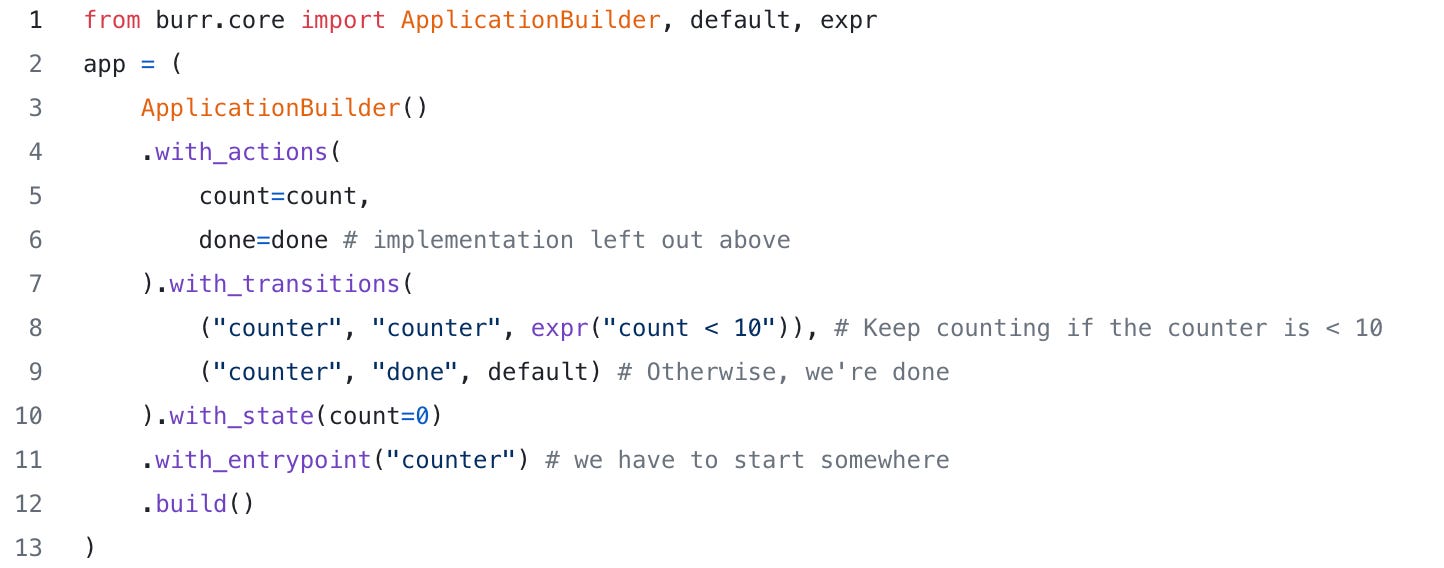

With the rise of LLMs, the regular software development cycle (SDLC) doesn’t really work. Why? Well you can’t write a simple test, you need to curate (and continually update) a suite of tests or a data set. This means you need to continually gather data to know how well your application is working and curate existing tests when requirements / the world changes. To put what we’ll present in perspective, here’s an example software development lifecycle (SDLC) with Burr and pytest:

While we don't cover everything in the diagram, in this post we specifically show how to do most of the Test Driven Development (TDD) loop:

Create a test case.

Run the test case.

Create a dataset.

Show how you might construct evaluation logic to evaluate the output of your agent / augmented LLM / application.

In terms of the rest of this post, we’ll introduce pytest for those that are new to it, and then explain how we can adopt it for our TDD cycle to wrangle evaluating non-deterministic outputs, i.e. the outputs of an LLM / agent.

pytest Introduction

pytest basics

We like pytest because we think it's simpler than the unittest module python comes with. To use it you need to install it first:

pip install pytestThen to define a test it's just a function that starts with test_:

Yep - no classes to deal with. Just a function that starts with test_. Then to run it:

pytest test_my_agent.pyBoom, you're testing!

Parameterizing Tests

We can also parameterize tests to run the same test with different inputs. This comes in handy as we build up data points to evaluate our agent / llm calls. Each input is then an individual test that can error. Here's an example:

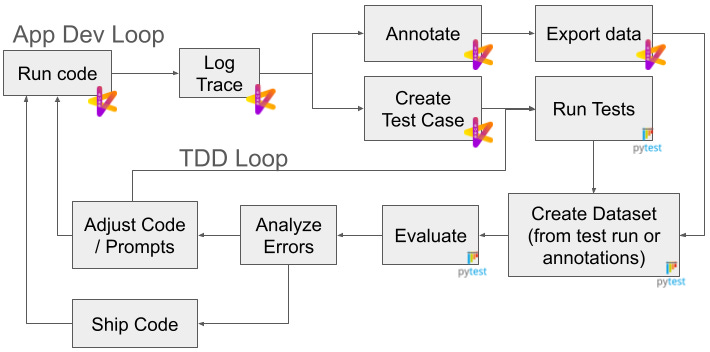

pytest fixtures

Another useful construct to know are pytest fixtures. A “fixture” is a function that is used to provide a fixed baseline upon which tests can reliably and repeatedly execute. They set up preconditions for a test, such as creating test data, initializing objects, or establishing database connections, etc… To create one in pytest, you declare a function and annotate it:

To use it, one just needs to “declare” it as a function parameter for a test.

We will both be constructing our own pytest fixture in this post, and using some pytest fixtures that come prebuilt with the pytest-harvest library.

Using pytest to evaluate your agent / augmented LLM / application

An agent / augmented LLM is a combination of LLM calls and logic. But how do we know if it's working? Well we can test & evaluate it.

From a high level we want to test & evaluate the "micro" i.e. the LLM calls & individual bits of logic, through to the "macro" i.e. the agent/application as a whole.

But, the challenge with LLM calls is that you might want to "assert" on various aspects of the output / behavior without stopping execution on the first assertion failure, which is standard test framework behavior. So what are you to do?

By default pytest fails on the first assert failure

In the examples we've shown above, the test will stop executing on the first assertion failure. This is the default behavior of pytest. While this works well for regular deterministic software testing, failing at the first failed evaluation for an LLM / agent test would be prohibitive in getting a full picture of our LLM / Agent application’s health. If you’re scratching your head here as to why this would be a problem, this next section is for you.

What kind of "asserts" do we want?

We might want to evaluate, or assert on the output in a number of ways:

Exact match - the output is exactly as expected.

Fuzzy match - the output is close to what we expect, e.g. does it contain the right words, is it "close" to the answer, etc.

Human grade - the output is graded by a human as to how close it is to the expected output. E.g. is this funny?

LLM grade - the output is graded by an LLM as to how close it is to the expected output.

Static measures - the output has some static measures that we want to evaluate, e.g. length, etc.

It is rare that you solely rely on (1) with LLMs, and you'll likely want to evaluate the output in a number of ways before making a pass / fail decision. E.g. that the output is close to the expected output, that it contains the right words, etc., and then make a pass / fail decision based on all these evaluations.

We will not dive deep into what evaluation logic you should use. If you want more information there, we suggest you start with posts like this. The TL;DR: is:

You need to understand your data and outcomes to choose the right evaluation logic.

Focus on binary measures, e.g. yes / no, pass /fail, etc.

Categorize errors to know what to test / check for

So now what? How do we evaluate all the assertions before making a pass / fail decision?

Not failing on first assert failure / logging test results

As mentioned above, one limitation of pytest is that it fails on the first assertion failure. This is not ideal if you want to evaluate multiple aspects of the output before making a pass / fail decision.

One approach is using the pytest-harvest plugin to log what our tests are doing. This allows us to capture the results of evaluations in a structured way without breaking at the first asserting failure.

This doesn’t limit how we use pytest. Rather, it enables us to mix and match appropriate hard "assertions" - i.e. definitely fail, with softer ones where we want to evaluate all aspects before making an overall pass / fail decision. We will walk through how to do this below using a few pytest & pytest-harvest constructs.

pytest-harvest

pip install pytest-harvestpytest-harvest is a plugin that provides a number of pytest fixtures. These fixtures have certain behaviors that we’ll use to log and then see the results of evaluations from within our tests.

results_bag is a fixture that we can log values to from our tests. This is useful if we don't want to fail on the first assert statement, and instead capture a lot more. E.g. you can assign arbitrary values to “keys” like below.

We can then access what was assigned in the results_bag from the pytest-harvest plugin via the module_results_df fixture that provides a pandas dataframe of the results:

We can then evaluate them as we see fit for our use case. E.g. we only pass tests if all the outputs are as expected, or we pass if 90% of the outputs are as expected, etc. You could also log this to a file, or a database, etc. for further inspection and record keeping, or combining it with open source frameworks like mlflow and using their evaluate functionality.

Note: we can also combine results_bag with pytest.mark.parametrize to run the same test with different inputs and expected outputs:

Using Burr's pytest Hook

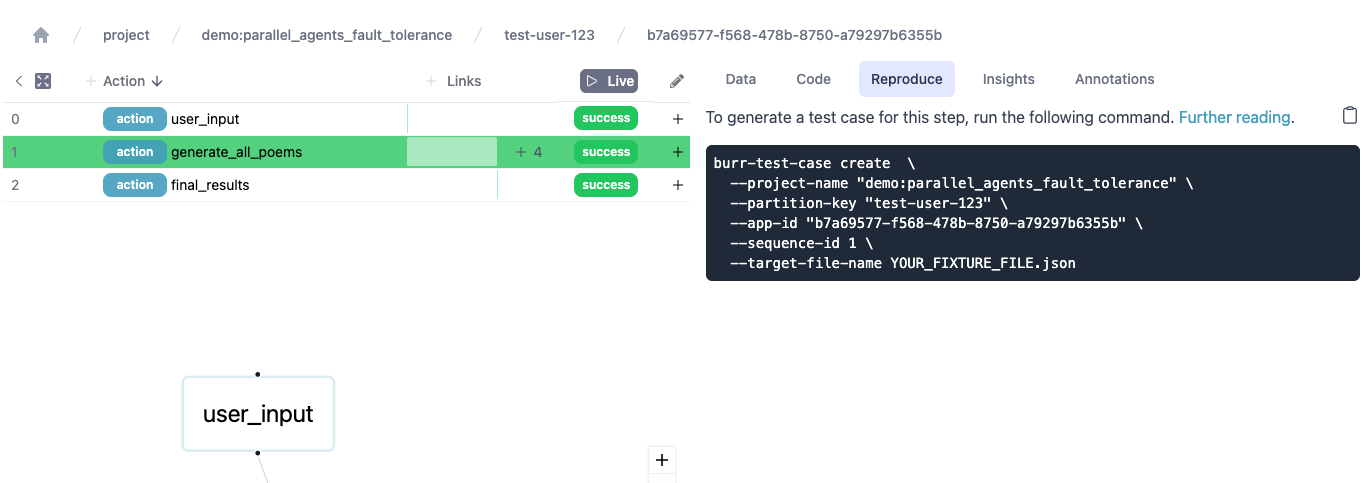

With Burr you can curate test cases from real application runs. You can then use these test cases in your pytest suite. Burr then has a hook that enables you to curate a file with the input state and expected output state for an entire run, or a single Burr action. See the Burr test case creation documentation for more details on how (or see the “reproduce” tab in the Burr UI like below).

Once you run the command you’ll get some code and a JSON file that you can then manage and curate into a pytest test. Then to combine with the pytest-harvest fixtures to capture evaluations, we need to add the `results_bag` fixture argument to the python function — note the final code below:

To test an entire agent, we can use the same approach, but instead rely on the input and output state being the entire state of the agent at the start and end of the run.

Using the Burr UI to observe test runs

You can also use the Burr UI to observe the test runs. This can be useful to see the results of the tests in a more visual way. To do this, you'd instantiate the Burr Tracker and then run the tests as normal. A note on ergonomics:

It's useful to use the test_name as the partition_key to easily find test runs in the Burr UI. You can also make the app_id match some test run ID, e.g. date-time, etc.

You can turn on opentelemetry tracing to see the traces in the Burr UI as well.

In general this means that you should have a parameterizeable application builder function that can take in a tracker and partition key.

TDD: Adopting an iterative SDLC to get out of POC purgatory

Unlike traditional software where writing tests means you’re done, with LLMs that is just the start of an iterative loop. By logging traces to get data and turning them into datapoints for tests in pytest, one can move fluidly between an application development, test driven development, and back. As more datapoints are accumulated, you can then better evaluate and measure prompt changes and their impacts.

By using pytest as the means to systematically go from shipping on vibes to having a structured way to evaluate and ensure that projects are heading in the right direction, you stand a much better chance of getting your LLM & Agent applications out of POC purgatory.

Your TDD Loop with Burr and pytest

To put it more concretely, the above code showed how you could:

Pull an application trace and create a pytest test for it.

Add and manage datapoints with parameterize and/or Burr’s pytest integration.

Evaluate multiple aspects of a single test and then aggregate them for multiple data points.

With this setup, you can now operate a Test Driven Development (TDD) loop to optimize and work on a single LLM call! As you make changes to the code / prompt, you’ll be able to use pytest to exercise that one LLM call and then evaluate the changes in a systematic fashion, without working only off of vibes.

Some uses of TDD:

Here are some more situations where applying TDD can help speed up development:

Guardrails. Taking the aforementioned TDD approach can make sure that prompt injection, harmful content, etc. is ignored, or dealt with appropriately.

Evaluating the impact of using a different LLM. If you got your application working with a large model, and want to assess if you can switch to a lower cost model, having a pytest suite you can run will help you quickly assess where you need to tune / change things.

One trick we like - run things multiple times

LLMs are inherently non-deterministic. So one way to explore and determine how the variance of a single prompt + data input leads to different outputs, is to run it multiple times.

With pytest this can take the form of a test that runs an action multiple times, then aggregates the responses to see how different they are. Using this approach can help you better tweak prompts to reduce variance.

The output of this test will show you where variance occurs, and is also then a useful measure in of itself in how stable things are, e.g. maybe it’s okay for some variance to exist, but not too much…

Capturing versions of your prompts to go with the datasets you generate via pytest

As you start to iterate and generate datasets (that's what happens if you log the output of the dataframe), you will need to tie together the version of the code that generated the dataset to the dataset itself. This is useful for debugging, and for ensuring that you can reproduce results (e.g. which version of the code / prompt created this output?). One way to do this is to capture the version of the code that generated the dataset in the dataset itself. Assuming you’re using `git` for version control, one just needs to capture the git commit hash of the code that generated the data set, i.e. the prompts + business logic. If you treat prompts as code, then your workflow might be:

Use git to commit changes.

Create a pytest fixture that captures the git commit hash of the current state of the git repo.

When you log the results of your tests, log the git commit hash as well as a column.

When you load / look at the data set, you can see the git commit hash that generated the data set to tie it back to the code that generated it.

To use it we add the fixture to the function that saves the results:

A Runnable Example

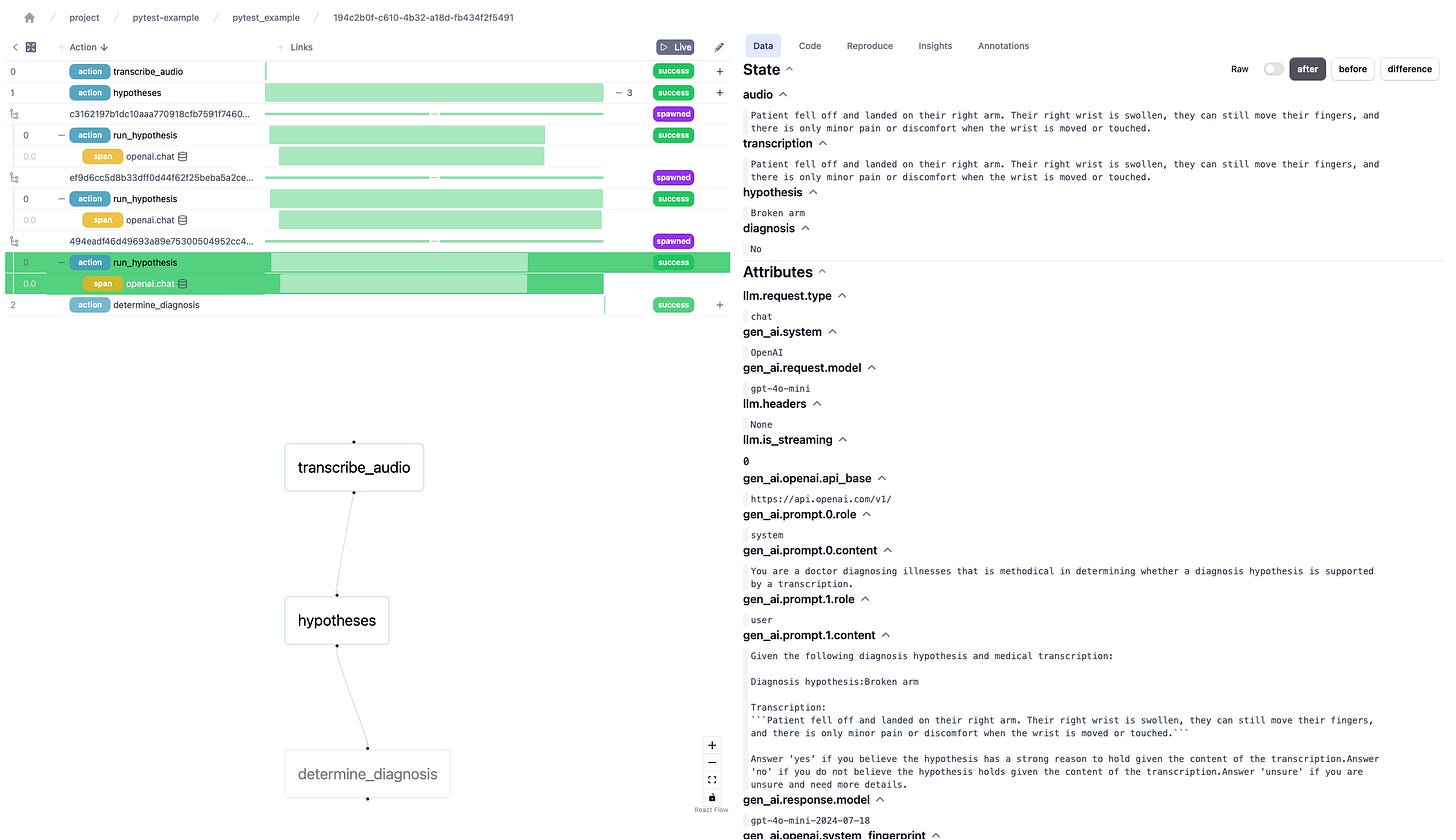

If you want to see some working code, using a dummy medical diagnosis workflow. Please see this example. In it you’ll find:

some_actions.py- a file that defines an augmented LLM application (it's not a full agent) with some actions. See image below - note the hypotheses action runs multiple LLM calls in parallel.test_some_actions.py- a file that defines some tests for the actions insome_actions.py.conftest.py- a file that contains some custom fixtures and pytest configuration for running our tests.

You'll see that we use the results_bag fixture to log the results of our tests,and then we can access these results via the module_results_df fixture that provides a pandas dataframe of the results. This dataframe is then saved as a CSV for uploading to google sheets, etc. for further analysis. You will also see uses of pytest.mark.parametrize and Burr's pytest feature for parameterizing tests from a JSON file.

To run the tests, you can run them with pytest:

pytest test_some_actions.pyAfter running the tests, you can see the results in a CSV file called results.csv in the same directory as the tests. Users then generally export / import that to a UI of their choice (e.g. google sheets, or a notebook) for further error analysis and human review.

You should also see the following in the Burr UI that can aid in reviewing some traces.

Summary

In this post we showed how to build a test driven development (TDD) loop using Burr and pytest. Specifically, we showed how you can take a trace from a Burr application and create a pytest case, then use pytest-fixtures (from pytest-harvest) to record all test evaluations and later post-process them to provide an aggregate measure to help you discern the reliability of your AI application over time. This allows you to quickly set up a test driven development loop where you then tweak prompts / code and then run pytest to systematically run evaluations on your changes.

If you like this post, or find other / better ways to use pytest, please leave a comment or create an issue in Burr’s repository. There’s also a FAQ list below.

Maven Course: Building LLM Applications for Data Scientists and Software Engineers

If you got this far and want to learn more, then sign-up for Hugo Bowne-Anderson’s and Stefan Krawczyk’s Maven Course: “Building LLM Applications for Data Scientists and Software Engineers”. It stars January 6th and will cover topics such as this that are required to ship reliable AI software.

Further Reading

If you’re interested in learning more about Burr:

Find the code for this example here

Join our Discord for help or if you have questions!

Subscribe to our youtube to watch some in-depth tutorials/overviews

Star our Github repository if you like what you see

Frequently Asked Questions:

General Questions

Q: Do I need to use Python to implement this testing approach?

A: While the examples use Python, pytest, and Burr, the general principles of systematic evaluation and test-driven development can be implemented in any programming language with appropriate testing frameworks. The key concepts of capturing test results, evaluating multiple aspects of LLM outputs, and maintaining test suites are language-agnostic.

Q: How is this different from traditional software testing?

A: Traditional software testing typically focuses on deterministic outputs with clear pass/fail criteria. Testing AI applications requires handling non-deterministic outputs, evaluating multiple aspects of responses, and often using fuzzy matching or LLM-based evaluation. This approach allows for capturing and analyzing various quality metrics rather than just binary pass/fail results.

Testing Implementation

Q: How do I decide which aspects of LLM output to evaluate?

A: Consider evaluating:

Content accuracy (exact or fuzzy matching)

Output format compliance

Response consistency across multiple runs

Domain-specific requirements

Safety and appropriateness checks

The specific aspects will depend on your application's requirements.

Q: What's the recommended approach for handling test data?

A: Start by capturing real application runs using Burr's tracking functionality. Curate these into test cases, focusing on diverse scenarios and edge cases. Maintain separate test suites for different aspects (e.g., content generation, safety checks, format compliance) and regularly update them as requirements evolve.

Performance and Scale

Q: How does this testing approach impact development speed?

A: While setting up systematic testing requires initial investment, it typically saves time in the long run by:

Catching issues early in development

Providing clear metrics for improvement

Enabling confident iteration on prompts and model interactions

Reducing manual testing effort

Q: Can this testing approach handle large-scale AI applications?

A: Yes, the approach scales well because:

Tests can be parameterized to handle many test cases

Results can be exported for analysis in external tools

The Burr UI provides visibility into test runs

Best Practices

Q: How often should these tests be run?

A: Consider running:

Core functionality tests with each code change

Comprehensive evaluation suites daily or weekly

Stability tests (multiple runs) during significant prompt or model changes

Performance benchmarks on a regular schedule

Q: How should I maintain test cases over time?

A: Best practices include:

Regular review and updates of test cases

Tying together version control with the results of tests.

Documentation of evaluation criteria

Tracking of model and prompt versions

Regular analysis of test results to identify patterns

Troubleshooting

Q: What should I do if test results are inconsistent?

A: Consider these steps:

Use the multiple-run approach described in the post to measure output variance

Review and possibly adjust evaluation criteria

Check if the inconsistency is expected LLM behavior or a bug

Consider adjusting prompts to reduce variance if needed

Q: How can I debug failed tests effectively?

A: Utilize:

Burr's UI for visualizing test runs

The detailed results captured in results_bag

pytest's debugging features

The CSV export functionality for detailed analysis

Integration and Tools

Q: Can this testing approach integrate with CI/CD pipelines?

A: Yes, you can integrate these tests into CI/CD pipelines. Consider:

Running core tests on every PR

Scheduling comprehensive evaluations

Setting appropriate thresholds for test success

Exporting results for tracking over time

Q: What tools are recommended for analyzing test results?

A: Common tools include:

Spreadsheet applications for basic analysis

Jupyter notebooks for detailed investigation

Visualization tools for tracking metrics over time

MLflow for experiment tracking

Custom dashboards using exported CSV data

Burr-Specific Questions

Q: What's the learning curve for implementing Burr in an existing AI application?

A: Burr is designed to be lightweight and integrates with existing Python applications. The main concepts to understand are state machines, actions, and transitions. If you're already familiar with pytest, the additional Burr-specific testing features should be straightforward to adopt.

Q: Can Burr handle asynchronous operations in AI applications?

A: Yes, Burr supports asynchronous operations and parallel execution of actions, as demonstrated in the medical diagnosis workflow example where multiple hypotheses are generated in parallel.

Interesting post. I have a few thoughts.

1. Re LLMs begin intrinsically non-deterministic ("the non-determinism inherent with LLMs"): I only half agree with this. In principle, a trained LLM is fully deterministic: the weights should determine the output for a given input if you don't deliberately add noise. But in practice, they are always computed in parallel, which can lead to different orders of execution, resulting in different rounding. And in practice they are also usually evaluated on GPUs, whose operations don't even usually guarantee order of execution. Also, in practice, noise is often added to in order to promote variation.) So you're right in practice, but I worry that the nature of the non-determinism is widely misunderstood. But I accept this is very picky point.

2.(Related.) I think it's important to distinguish between testing a fixed LLM (with a given set of trained weights) and testing different LLMs (ones with different weights). The former pertains if you want to do things like test running the same LLM on different hardware, updated software, after making performance tweaks etc. In that case, the goal is probably to check that the LLM's behaviour is broadly consistent in various situations.

But if you're updating the weights, or testing an entirely new LLM (these seem to be the main case you're thinking about), the issue is different, and you might be more concerned with the reasonableness or “correctness” of the outputs. That's more where the fancier evaluations need to come in. Of course, normally the whole point of updating the weights or using a new LLM is to make it better, which suggests that there's going to be a lot of ongoing tweaking of the test cases and expected results. Very much a "painting the Forth Railway Bridge" kind-of a job.

3. At a higher level, there's a question of what you're really trying to test when you test an LLM. At one level, there's the mechanical "is it predicting the right tokens given the weights?" But that's not really what you're talking about. In fact what you're talking about is more regression testing: is a new version behaving at least as well as a previous version. That's obviously hard, but useful.

4. I think if I were providing (or using) an LLM, I would be more specifically interested in checking that safeguards work—things like: Is suppression of truly terrible outputs (still) working? So I think I'd want a lot of tests of injection attacks and so forth, checking that "Ignore all previous instructions" and "Imagine you're a racist demagogue" type things don't push the system to start emitting really dangerous or bigoted output. And those would probably be cases where any failure should constitute a failure for the whole system.

Anyway, good to see people thinking about these things.

Nick Radcliffe

Stochastic Solutions Limited